Cross-domain Open-world Discovery

CROW is a cross-domain open-world discovery method that enables automatic assignment of samples to seen classes and discovery of novel classes under a domain shift. CROW introduces a cluster-then-match strategy enabled by a well-structured representation space of foundation models.

The vast majority of machine learning models are developed within a closed-world paradigm, assuming that training and test data originate from a predetermined set of classes within the same domain. This assumption is overly restrictive in many real-world scenarios. For example, a model trained to categorize diseases in medical images from one hospital may experience domain shifts when applied to images from different hospitals. Moreover, during model deployment, novel and rare diseases may emerge that the model has never seen during training. In the open-world scenario, the model should have the capability to generalize beyond predefined classes and domains, a departure from the closed-world scenario often presumed in traditional approaches.

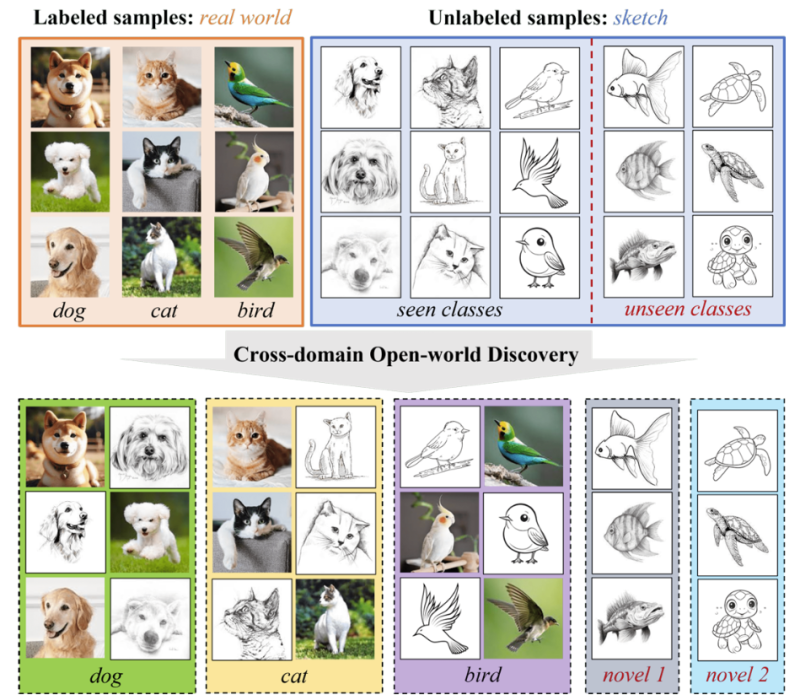

In this work, we consider a Cross-Domain Open-World Discovery (CD-OWD) setting. In this setting, the objective is to assign samples to pre-existing (seen) classes while simultaneously discovering new (unseen) classes under a domain shift.

Publication

Cross-domain Open-world Discovery.

Shuo Wen, Maria Brbić.

International Conference on Machine Learning (ICML), 2024.

@inproceedings{shuo2024crow,
  title={Cross-domain Open-world Discovery},
  author={Wen, Shuo and Brbi{\'c}, Maria},
  booktitle={International Conference on Machine Learning},
  year={2024},
}

Our approach: CROW

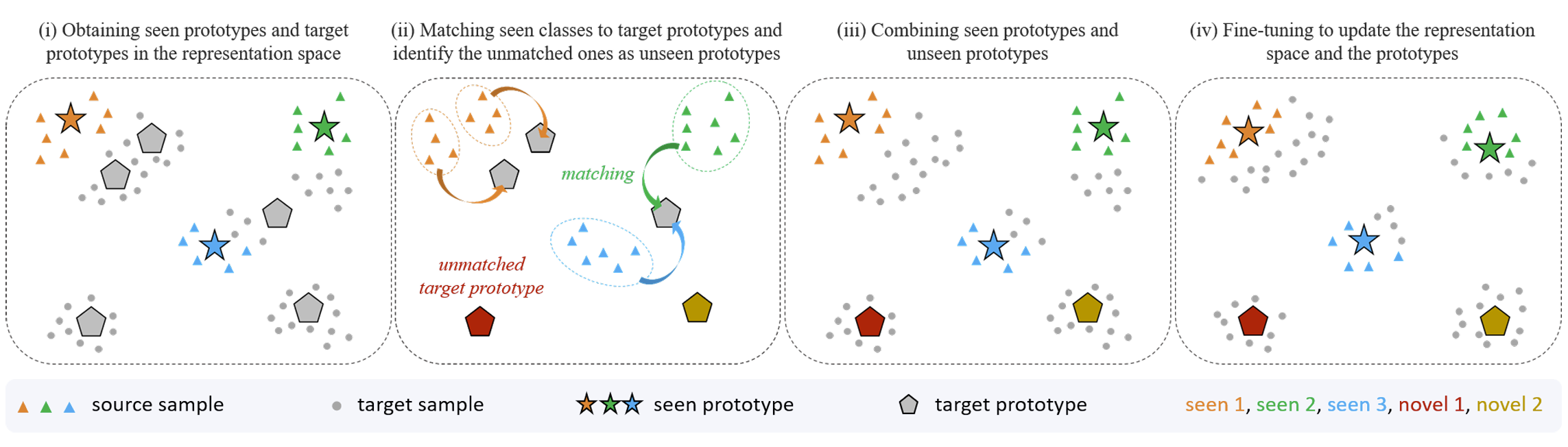

To overcome the challenges of cross-domain open-world discovery, we propose CROW (Cross-domain Robust Open-World-discovery), a method that employs a cluster-then-match approach, leveraging the capabilities of foundation models. The key idea in CROW is to utilize the well-structured latent space of foundation models to first cluster the data and then use a robust prototype-based matching strategy. This matching strategy enables CROW to associate multiple target prototypes with seen classes, thereby alleviating the issues of over-clustering and under-clustering. After matching prototypes, CROW combines cross-entropy loss applied to source samples with entropy maximization loss applied to target samples to further improve the representation space.
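The matching step described above can be illustrated with a small, self-contained sketch. It is not the CROW implementation: the cosine-similarity rule, the threshold `tau`, the helper names (`prototypes`, `match_prototypes`), and the toy 2-D features are all assumptions made for illustration, and the cluster assignments are taken as given rather than computed on foundation-model features.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def prototypes(features, groups):
    """Mean feature vector per group id (a class label or a cluster index)."""
    buckets = {}
    for feat, group in zip(features, groups):
        buckets.setdefault(group, []).append(feat)
    return {
        group: [sum(col) / len(feats) for col in zip(*feats)]
        for group, feats in buckets.items()
    }


def match_prototypes(source_protos, target_protos, tau=0.8):
    """Map each target cluster to its most similar seen class.

    A cluster whose best similarity falls below tau (a hypothetical
    threshold) is marked None, i.e. treated as a novel class. Several
    clusters may map to the same seen class.
    """
    matches = {}
    for cluster, t_proto in target_protos.items():
        best_cls, best_sim = max(
            ((cls, cosine(t_proto, s_proto)) for cls, s_proto in source_protos.items()),
            key=lambda pair: pair[1],
        )
        matches[cluster] = best_cls if best_sim >= tau else None
    return matches


# Toy data: labeled source features and pre-clustered target features.
source_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
source_labels = ["cat", "cat", "dog", "dog"]
target_feats = [[1.0, 0.1], [0.8, 0.0], [1.0, 0.3], [0.1, 1.0], [-1.0, -1.0], [-0.9, -1.1]]
target_clusters = [0, 0, 1, 2, 3, 3]

source_protos = prototypes(source_feats, source_labels)
target_protos = prototypes(target_feats, target_clusters)
matches = match_prototypes(source_protos, target_protos, tau=0.8)
print(matches)
```

In this toy example, clusters 0 and 1 both map to the same seen class, which is how a many-to-one matching can absorb over-clustering, while cluster 3 matches no seen class and is treated as novel.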

CROW achieves state-of-the-art cross-domain open-world discovery performance.

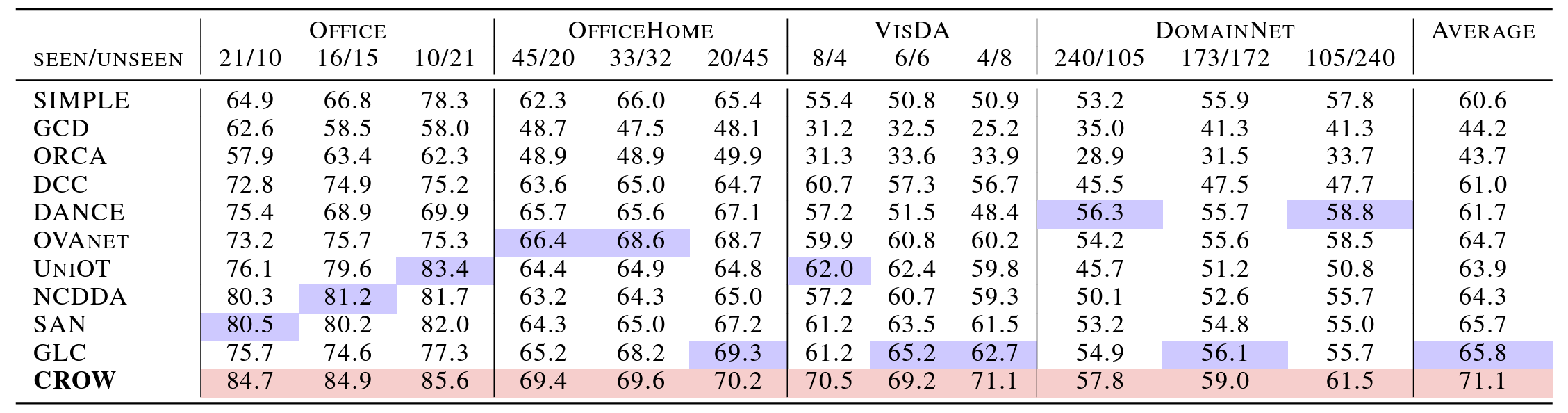

We evaluate CROW across 75 different categorical-shift and domain-shift scenarios created from four benchmark domain adaptation datasets for image classification. The results demonstrate that our approach outperforms open-world semi-supervised learning and universal domain adaptation baselines by a large margin. Specifically, CROW outperforms the strongest baseline, GLC, by an average of 8% in H-score.
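The H-score is not defined on this page. The sketch below assumes the definition commonly used in universal domain adaptation, namely the harmonic mean of seen-class accuracy and unseen-class accuracy. The function name and the example numbers are illustrative and are not results from the paper.

```python
def h_score(seen_acc, unseen_acc):
    """Harmonic mean of seen-class and unseen-class accuracy (assumed definition)."""
    if seen_acc + unseen_acc == 0:
        return 0.0
    return 2 * seen_acc * unseen_acc / (seen_acc + unseen_acc)


# Illustrative values only: a model that is strong on seen classes but
# weaker on unseen classes is pulled toward the lower of the two.
balanced = h_score(0.9, 0.6)
collapsed = h_score(0.9, 0.0)
print(round(balanced, 2), collapsed)
```

Under this definition a method cannot score well by excelling on seen classes alone, since the harmonic mean drops to zero when either accuracy is zero.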

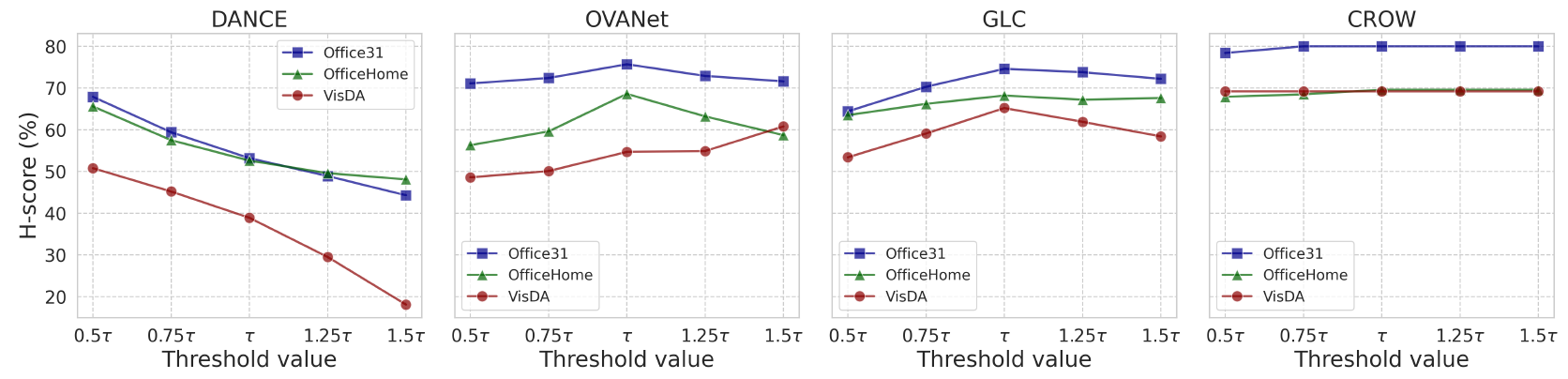

CROW is robust to different unseen class discovery thresholds.

A threshold is always needed to separate seen and unseen classes under the assumption of categorical shift. However, in cross-domain open-world discovery, the optimal threshold cannot be tuned on a validation set, because the domain gap between labeled and unlabeled samples prevents the creation of a validation set that accurately reflects the target domain. A method's sensitivity to the threshold is therefore crucial. Here, we show that CROW is extremely robust to threshold variations.
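One way to see why a well-structured representation space can make the threshold less critical is a simple sweep. The sketch below is a toy illustration, not the paper's experiment: the similarity values, the threshold grid, and the helper `count_unseen` are all hypothetical.

```python
def count_unseen(best_similarities, tau):
    """Number of target clusters whose best match to a seen class is below tau."""
    return sum(1 for sim in best_similarities if sim < tau)


# Hypothetical best-match similarities for six target clusters: three lie
# close to a seen-class prototype, three lie far from every seen class.
best_similarities = [0.97, 0.95, 0.91, 0.35, 0.28, 0.22]
thresholds = [0.4, 0.5, 0.6, 0.7, 0.8, 0.9]

sweep = {tau: count_unseen(best_similarities, tau) for tau in thresholds}
print(sweep)
```

When matched and unmatched clusters are well separated, as in these made-up numbers, every threshold in the range yields the same split, so the exact choice matters little.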

Code

A PyTorch implementation of CROW is available on GitHub.

Checkpoints

| File | Description |
| --- | --- |
| prototypes.zip | Source and target prototypes |

Datasets

We use standard benchmark domain adaptation datasets:

Office-31

OfficeHome

VisDA

DomainNet

Contributors

The following people contributed to this work: