The Pursuit of Human Labeling: A New Perspective on Unsupervised Learning

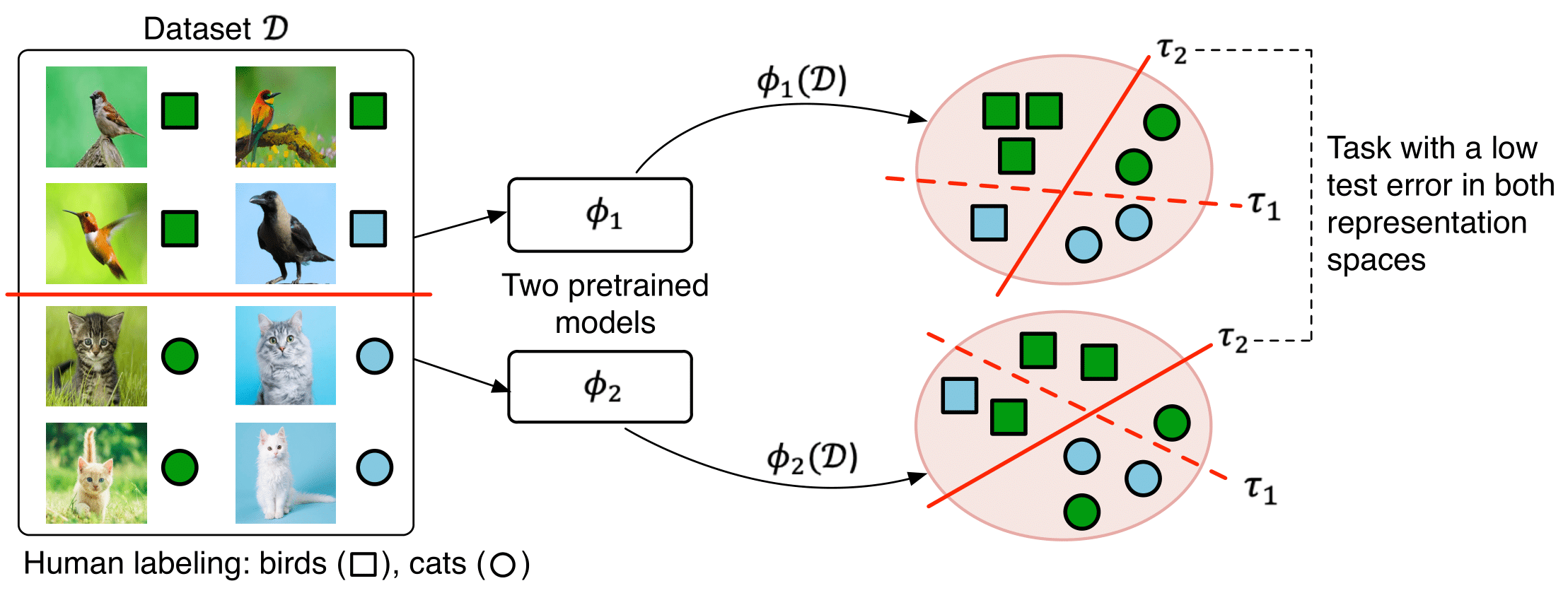

HUME is a model-agnostic framework for inferring the human labeling of a given dataset without any external supervision. It offers a new way to tackle unsupervised learning: searching for labelings that are consistent across different representation spaces.

The key insights underlying our approach are that (i) many human-labeled tasks are linearly separable in a sufficiently strong representation space, and (ii) although deep neural networks have their own inductive biases, which do not necessarily reflect human perception and can lead them to fit spurious features, human-labeled tasks are invariant to the underlying model and the resulting representation space. We use these observations to develop a generalization-based optimization objective, which guides the search over all possible labelings of a dataset toward an underlying human labeling. In practice, we only train linear classifiers on top of pretrained representations that remain fixed during training, which makes our framework compatible with any large pretrained or self-supervised model. Altogether, our work provides a fundamentally new view on how to tackle unsupervised learning.
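To make the generalization-based objective more concrete, here is a minimal sketch of scoring one candidate labeling by how well linear classifiers generalize it across fixed representation spaces. It is an illustration under stated assumptions, not HUME's implementation: the linear classifier is swapped for closed-form ridge regression onto one-hot targets, generalization error is measured on a single random 50/50 split, and the scores from different spaces are simply averaged. All function names are hypothetical, and the search over labelings itself is not shown.

```python
import numpy as np


def ridge_linear_classifier(x_train, y_train, num_classes, reg=1e-2):
    """Fit a linear classifier by ridge regression onto one-hot targets (closed form).

    Stand-in assumption: HUME's actual linear models may be trained differently."""
    # Append a bias column so the model is affine: [x, 1] @ W.
    xb = np.hstack([x_train, np.ones((x_train.shape[0], 1))])
    targets = np.eye(num_classes)[y_train]
    # Solve (X^T X + reg * I) W = X^T Y for the weight matrix W.
    gram = xb.T @ xb + reg * np.eye(xb.shape[1])
    return np.linalg.solve(gram, xb.T @ targets)


def predict(weights, x):
    """Predicted class = highest linear score."""
    xb = np.hstack([x, np.ones((x.shape[0], 1))])
    return np.argmax(xb @ weights, axis=1)


def generalization_error(features, labeling, num_classes, train_frac=0.5, seed=0):
    """Held-out error of a linear classifier trained to reproduce `labeling` on fixed features."""
    rng = np.random.default_rng(seed)
    perm = rng.permutation(len(labeling))
    cut = int(train_frac * len(labeling))
    tr, te = perm[:cut], perm[cut:]
    w = ridge_linear_classifier(features[tr], labeling[tr], num_classes)
    return float(np.mean(predict(w, features[te]) != labeling[te]))


def consistency_score(spaces, labeling, num_classes):
    """Hypothetical objective: mean held-out error over several representation spaces.

    Lower means the labeling is easy to generalize in every space at once."""
    return float(np.mean([generalization_error(f, labeling, num_classes) for f in spaces]))
```

On synthetic data where two feature spaces both encode the same two groups, the ground-truth grouping should score close to 0 while a random labeling should score close to 0.5, mirroring the intuition that a human labeling generalizes in every sufficiently strong space.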

Publication

The Pursuit of Human Labeling: A New Perspective on Unsupervised Learning.

Artyom Gadetsky, Maria Brbić.

Advances in Neural Information Processing Systems (NeurIPS), 2023.

@inproceedings{gadetsky2023pursuit,
  title={The Pursuit of Human Labeling: A New Perspective on Unsupervised Learning},
  author={Gadetsky, Artyom and Brbi{\'c}, Maria},
  booktitle={Advances in Neural Information Processing Systems},
  year={2023},
}

Overview of HUME

HUME uses pretrained representations, together with linear models trained on top of them, to assess the quality of any given labeling. Optimizing the resulting generalization-based objective yields labelings that are strikingly well correlated with human labelings.

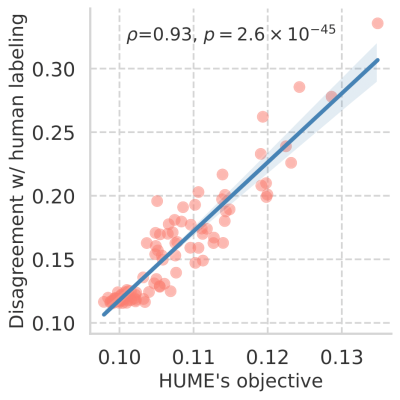

HUME’s objective is strikingly well correlated with the human labeling.

The plot below shows the correlation between HUME’s objective and the distance to the ground-truth human labeling on the CIFAR-10 dataset. HUME generates different labelings of the data in search of the underlying human labeling. For each labeling (a data point on the plot), HUME’s objective is the generalization error of linear classifiers in different representation spaces. This objective is strikingly well correlated with the distance to the human labeling (Pearson correlation ρ = 0.93, two-sided p = 2.6 × 10⁻⁴⁵). In particular, HUME achieves the lowest generalization error on tasks that almost perfectly correspond to the human labeling, which allows it to recover the human labeling without external supervision.

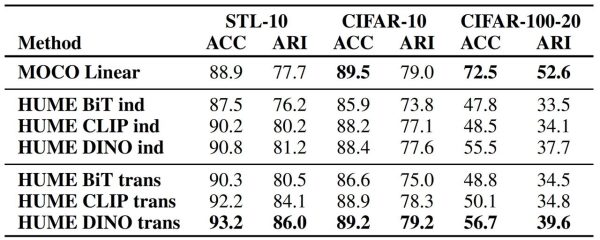
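Because cluster ids are arbitrary, any distance between a found labeling and the human labeling has to ignore how classes are named. The sketch below assumes one such distance (the fraction of disagreeing samples after the best one-to-one renaming of classes) and computes the Pearson coefficient plotted above; the text does not state which distance HUME uses, and both helper names are hypothetical. The brute-force search over renamings is only practical for a handful of classes; for larger label sets the usual replacement is a Hungarian assignment on the confusion matrix (`scipy.optimize.linear_sum_assignment`), and `scipy.stats.pearsonr` returns the coefficient together with a two-sided p-value.

```python
import itertools

import numpy as np


def labeling_distance(labels_a, labels_b, num_classes):
    """Fraction of samples on which two labelings disagree, minimized over class renamings.

    Assumes both labelings use class ids 0..num_classes-1."""
    best_agreement = 0.0
    # Try every one-to-one mapping of labels_a's ids onto labels_b's ids.
    for mapping in itertools.permutations(range(num_classes)):
        remapped = np.asarray(mapping)[labels_a]
        best_agreement = max(best_agreement, float(np.mean(remapped == labels_b)))
    return 1.0 - best_agreement


def pearson(x, y):
    """Pearson correlation coefficient between two 1-D sequences."""
    x = np.asarray(x, dtype=float) - np.mean(x)
    y = np.asarray(y, dtype=float) - np.mean(y)
    return float(np.sum(x * y) / np.sqrt(np.sum(x * x) * np.sum(y * y)))
```

For example, `[2, 2, 0, 0, 1, 1]` and `[0, 0, 1, 1, 2, 2]` describe the same partition with different class names, so their distance is 0.0.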

HUME, although fully unsupervised, can perform on par with supervised methods.

Since HUME trains linear classifiers on top of pretrained self-supervised representations, a supervised linear probe on top of the same representations is a natural baseline for evaluating how closely the proposed framework can match the performance of a supervised model. We therefore train a linear model using the ground-truth labels of the train split and report its results on the test split of the corresponding dataset. HUME can be trained in two settings: inductive (ind), which trains on the train split and evaluates on the held-out test split, and transductive (trans), which trains on both the train and test splits. We trained HUME in both settings, using MoCo representations as the first space and representations from different large pretrained models (BiT, CLIP, DINO) as the second space, and compared it to the supervised linear probe. The results in the table below show that, remarkably, without using any supervision, HUME on the STL-10 dataset consistently outperforms the supervised linear model in the transductive setting, and also outperforms it in the inductive setting when using CLIP or DINO. On the CIFAR-10 dataset, HUME achieves performance comparable to the supervised linear classifier.

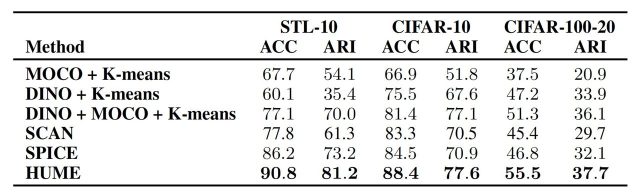
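The inductive and transductive settings differ only in which samples the unsupervised fit is allowed to see. A minimal sketch, assuming the train and test features are stored in one array with the train split first, and that evaluation uses the test split in both settings; `protocol_indices` is a hypothetical helper, not part of HUME's code.

```python
import numpy as np


def protocol_indices(n_train, n_test, setting):
    """Return (fit_idx, eval_idx) into a concatenated [train; test] feature array."""
    train_idx = np.arange(n_train)
    test_idx = np.arange(n_train, n_train + n_test)
    if setting == "inductive":
        # Fit only on the train split; the test split stays unseen until evaluation.
        fit_idx = train_idx
    elif setting == "transductive":
        # Fit on train and test features together (still without any labels).
        fit_idx = np.concatenate([train_idx, test_idx])
    else:
        raise ValueError(f"unknown setting: {setting!r}")
    # Assumption: both settings are scored on the test split.
    return fit_idx, test_idx
```

No ground-truth labels enter either fit; the transductive setting simply gives the search more unlabeled data from the same distribution as the evaluation samples.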

HUME achieves state-of-the-art unsupervised learning performance.

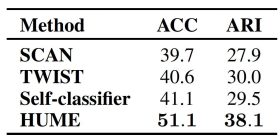

We compare the performance of HUME to state-of-the-art deep clustering methods. The results show that HUME consistently outperforms all baselines by a large margin. On the STL-10 and CIFAR-10 datasets, HUME improves accuracy by 5% over the best deep clustering baseline, and ARI by 11% and 10%, respectively. On the CIFAR-100-20 dataset, HUME achieves a remarkable improvement of 19% in accuracy and 18% in ARI. Overall, these results show that the proposed framework effectively addresses the challenges of unsupervised learning.
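ARI (adjusted Rand index), one of the two metrics above, compares two partitions while correcting for chance agreement, so it does not depend on which cluster ids are used. Below is a from-scratch sketch of the standard Hubert-Arabie formula computed from the contingency table; `adjusted_rand_index` is a hypothetical helper, and a library routine such as `sklearn.metrics.adjusted_rand_score` computes the same quantity. Which implementation the reported numbers rely on is not stated here. Clustering accuracy, by contrast, first matches predicted clusters to classes one-to-one (typically with a Hungarian assignment) and then counts correct samples.

```python
import numpy as np


def comb2(n):
    """Number of unordered pairs, n choose 2 (works elementwise on arrays)."""
    return n * (n - 1) / 2.0


def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand index from the contingency table (assumes at least 2 samples)."""
    labels_true = np.asarray(labels_true)
    labels_pred = np.asarray(labels_pred)
    # Re-index both labelings to 0..K-1 so they can address table rows/columns.
    _, t = np.unique(labels_true, return_inverse=True)
    _, p = np.unique(labels_pred, return_inverse=True)
    contingency = np.zeros((t.max() + 1, p.max() + 1))
    np.add.at(contingency, (t, p), 1)
    sum_cells = comb2(contingency).sum()
    sum_rows = comb2(contingency.sum(axis=1)).sum()
    sum_cols = comb2(contingency.sum(axis=0)).sum()
    expected = sum_rows * sum_cols / comb2(len(labels_true))
    max_index = 0.5 * (sum_rows + sum_cols)
    if max_index == expected:
        # Only happens when both labelings are identical trivial partitions.
        return 1.0
    return float((sum_cells - expected) / (max_index - expected))
```

Renaming clusters leaves the score unchanged: `[0, 0, 1, 1]` against `[1, 1, 0, 0]` gives 1.0.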

HUME scales to the challenging large-scale ImageNet-1000 benchmark.

We also study HUME’s performance on the large-scale ImageNet-1000 benchmark and compare it to state-of-the-art deep clustering methods. The results in the table below show that HUME achieves a remarkable 24% relative improvement in accuracy and a 27% relative improvement in ARI over the considered baselines, confirming that HUME scales to challenging fine-grained benchmarks.

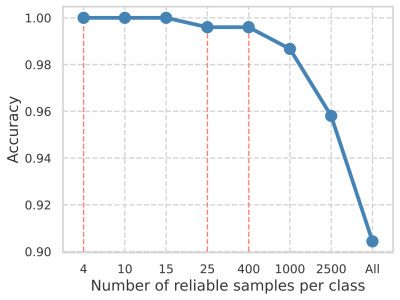
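Relative improvement is assumed here to follow its usual definition, where $m$ denotes the metric (accuracy or ARI) of HUME and of the compared baseline:

```latex
\text{relative improvement} = \frac{m_{\text{HUME}} - m_{\text{baseline}}}{m_{\text{baseline}}} \times 100\%
```

This differs from an absolute gain in percentage points: raising accuracy from 40% to 50% is a 10-point absolute gain but a (50 - 40)/40 = 25% relative gain.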

HUME can produce reliable samples for semi-supervised learning (SSL).

HUME can be used to reliably generate labeled examples for SSL methods. By generating a few reliable samples per class (pseudo-labels), an unsupervised learning problem can be transformed into an SSL problem, and any SSL method can then be applied using these reliable samples as initial labels. HUME produces such reliable samples in a simple way: a sample is considered reliable if (i) the majority of the found labelings assign it to the same class, and (ii) the majority of its neighbors have the same label. The figure below shows the accuracy of the generated reliable samples on the CIFAR-10 dataset. In the standard SSL evaluation setting, which uses 4 labeled examples per class, HUME produces samples with perfect accuracy. Even with 15 labeled examples per class, the reliable samples generated by HUME remain perfectly accurate. Remarkably, in other frequently evaluated SSL settings on CIFAR-10, with 25 and 400 samples per class, the accuracy of the reliable samples produced by HUME is near perfect (99.6% and 99.7%, respectively).
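The two reliability conditions can be sketched as follows. This is a minimal sketch under assumptions the text does not state: the found labelings are already aligned to a shared set of class ids, "majority" means strictly more than half, and neighbors are the k nearest samples by Euclidean distance in a single representation space. The function names and the default `k=5` are hypothetical, and the full pairwise distance matrix only suits small datasets.

```python
import numpy as np


def majority_vote(labelings):
    """Per-sample majority label and its vote share across several aligned labelings."""
    labelings = np.asarray(labelings)  # shape (num_labelings, num_samples)
    num_classes = labelings.max() + 1
    # counts[c, i] = number of labelings that assign sample i to class c
    counts = np.stack([(labelings == c).sum(axis=0) for c in range(num_classes)])
    return counts.argmax(axis=0), counts.max(axis=0) / labelings.shape[0]


def knn_indices(features, k):
    """Indices of the k nearest neighbors (Euclidean), excluding each point itself."""
    sq = np.sum(features ** 2, axis=1)
    dists = sq[:, None] + sq[None, :] - 2.0 * features @ features.T
    np.fill_diagonal(dists, np.inf)
    return np.argsort(dists, axis=1)[:, :k]


def reliable_mask(labelings, features, k=5):
    """A sample is 'reliable' if (i) a strict majority of labelings agree on its class and
    (ii) a strict majority of its k neighbors carry that same majority label."""
    label, vote_share = majority_vote(labelings)
    neighbor_labels = label[knn_indices(features, k)]  # shape (num_samples, k)
    neighbor_share = (neighbor_labels == label[:, None]).mean(axis=1)
    return (vote_share > 0.5) & (neighbor_share > 0.5)
```

Picking a fixed number of reliable samples per class (for example, 4 for the standard setting) from the returned mask is a separate step not shown here.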