With Limited Data for Multimodal Alignment, Let the STRUCTURE Guide You

STRUCTURE is a framework for building multimodal models in low-data regimes by aligning frozen unimodal foundation models, enabling strong performance on zero-shot classification and retrieval tasks. It combines a simple, plug-and-play regularization technique that preserves the geometric structure of each modality’s latent space with a strategy that aligns the layers with the highest representational similarity across modalities.

Multimodal models have demonstrated powerful capabilities in complex tasks requiring multimodal alignment, including zero-shot classification and cross-modal retrieval. However, existing models typically rely on millions of paired multimodal samples, which are prohibitively expensive or infeasible to obtain in many domains. In this work, we explore the feasibility of building multimodal models with a limited amount of paired data by aligning pretrained unimodal foundation models. We show that high-quality alignment is possible with as few as tens of thousands of paired samples, less than 1% of the data typically used in the field. To achieve this, we introduce STRUCTURE, an effective regularization technique that preserves the neighborhood geometry of the latent space of unimodal encoders. Additionally, we show that aligning the last layers is often suboptimal and demonstrate the benefits of aligning the layers with the highest representational similarity across modalities. These two components can be readily incorporated into existing alignment methods, yielding substantial gains across 24 zero-shot image classification and retrieval benchmarks, with an average relative improvement of 51.6% in classification and 91.8% in retrieval tasks.

Publication

With Limited Data for Multimodal Alignment, Let the STRUCTURE Guide You

Fabian Gröger*, Shuo Wen*, Huyen Le, Maria Brbić.

@inproceedings{groeger2025structure,
  title={With Limited Data for Multimodal Alignment, Let the STRUCTURE Guide You},
  author={Fabian Gr\"oger and Shuo Wen and Huyen Le and Maria Brbi\'c},
  year={2025},
  booktitle={NeurIPS},
  url={https://arxiv.org/abs/2506.16895},
}

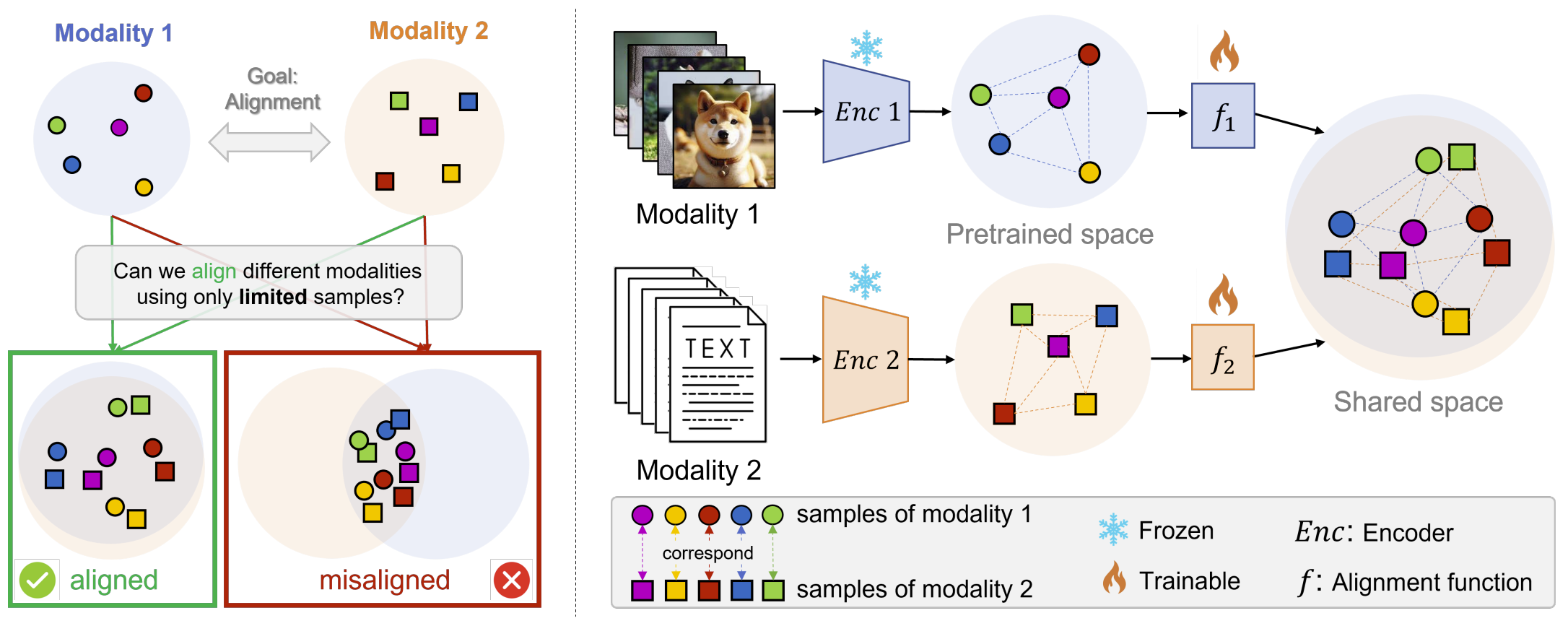

Key idea: preserving the pretrained latent structures

The objective is to align representations from two modalities (e.g., images and text) in a shared embedding space. When only a small amount of paired data is available, the central challenge is to guide the model toward a well-aligned solution rather than a misaligned one caused by overfitting. The key idea is to freeze the pretrained encoders and learn lightweight alignment functions that preserve each modality’s pretrained latent structure during alignment.

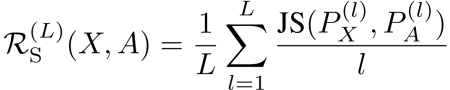

Method Part I: Preserving the neighborhood via STRUCTURE regularization

With a limited number of paired samples, it is crucial to preserve the latent structure of pretrained unimodal encoders that are trained on millions or even billions of examples, as they encode meaningful relationships between samples. To achieve this, we introduce STRUCTURE, a regularization term that preserves the neighborhood relationships of the pretrained space of unimodal encoders within the aligned space. The equation is as follows, where X is the pretrained space and A is the aligned space.
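To make the idea of "preserving neighborhood relationships" concrete, here is a minimal sketch of a neighborhood-preservation penalty. It is an illustration under stated assumptions, not the paper's exact STRUCTURE formula: we assume each sample's neighborhood is summarized as a softmax over cosine similarities to the other samples in a batch (temperature `tau`, a hypothetical parameter), and that the pretrained space X and aligned space A are compared with a Jensen-Shannon divergence. The function names (`neighbor_distributions`, `structure_like_reg`) are hypothetical, and plain Python is used only to keep the sketch self-contained; a training implementation would use an autodiff framework such as PyTorch so gradients flow into A.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def _unit(v: Sequence[float]) -> List[float]:
    """L2-normalize a vector (zero vectors are left unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def neighbor_distributions(z: Matrix, tau: float = 0.1) -> Matrix:
    """Row i is a softmax over the cosine similarities between sample i
    and every other sample in the batch (self-similarity is excluded)."""
    zn = [_unit(v) for v in z]
    rows = []
    for i, zi in enumerate(zn):
        logits = [sum(a * b for a, b in zip(zi, zj)) / tau
                  for j, zj in enumerate(zn) if j != i]
        top = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(s - top) for s in logits]
        total = sum(exps)
        rows.append([e / total for e in exps])
    return rows


def _kl(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) in nats, skipping zero-probability entries of p."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence (natural log), bounded above by ln 2."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * _kl(p, m) + 0.5 * _kl(q, m)


def structure_like_reg(x: Matrix, a: Matrix, tau: float = 0.1) -> float:
    """Hypothetical neighborhood-preservation penalty: mean JS divergence
    between each sample's neighbor distribution in the pretrained space x
    and in the aligned space a (same samples, same order)."""
    px = neighbor_distributions(x, tau)
    pa = neighbor_distributions(a, tau)
    return sum(js_divergence(p, q) for p, q in zip(px, pa)) / len(px)
```

The penalty is zero when the aligned embeddings reproduce the pretrained neighborhoods (for example, a pure rescaling of the batch) and grows when alignment reshuffles which samples are close to which.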

Incorporating the regularization into an existing alignment method yields the final objective: the existing alignment loss plus two regularization terms, one for each modality.
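Schematically, the combined objective has the form below, where X^(1) and X^(2) are the pretrained spaces of the two modalities, A^(1) and A^(2) the corresponding aligned spaces, L_align the existing alignment loss, and R the STRUCTURE term. The shared weight λ is our assumption for illustration; the paper may weight the terms differently.

```latex
\mathcal{L} \;=\; \mathcal{L}_{\text{align}}\big(A^{(1)}, A^{(2)}\big)
\;+\; \lambda\,\mathcal{R}\big(X^{(1)}, A^{(1)}\big)
\;+\; \lambda\,\mathcal{R}\big(X^{(2)}, A^{(2)}\big)
```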

More details can be found in Section 4 of our paper, in the paragraph “Preserving neighborhood via STRUCTURE regularization.”

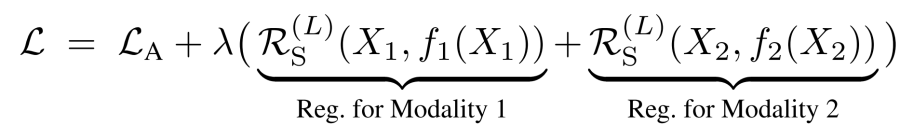

Method Part II: Similarity-based layer selection

In parameter-frozen alignment, the quality of alignment is closely linked to the representational similarity between the unimodal representation spaces. Selecting the appropriate layers for alignment is therefore critical, rather than always using the last layer (stars in the Figure mark the last layer). We propose to use mutual k-nearest-neighbor (mutual kNN) similarity to select the most similar layers, which are not necessarily the last ones, and then align them.
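The layer-selection step can be sketched as an exhaustive search over layer pairs scored by mutual kNN overlap. This is a minimal sketch under assumptions of our own: neighbors are found with cosine similarity, a sample is never its own neighbor, the score is the average fraction of shared neighbors (normalized by k times the number of samples), and the layer names and toy embeddings are hypothetical. The released implementation may differ in the choice of k, batching, and hardware acceleration.

```python
import math
from itertools import product
from typing import Dict, List, Sequence, Set, Tuple

Matrix = List[List[float]]


def _cosine(u: Sequence[float], v: Sequence[float]) -> float:
    nu = math.sqrt(sum(x * x for x in u)) or 1.0
    nv = math.sqrt(sum(x * x for x in v)) or 1.0
    return sum(a * b for a, b in zip(u, v)) / (nu * nv)


def knn_sets(z: Matrix, k: int) -> List[Set[int]]:
    """For each sample, the indices of its k most cosine-similar other samples."""
    result = []
    for i, zi in enumerate(z):
        ranked = sorted(((_cosine(zi, zj), j) for j, zj in enumerate(z) if j != i),
                        reverse=True)
        result.append({j for _, j in ranked[:k]})
    return result


def mutual_knn(za: Matrix, zb: Matrix, k: int) -> float:
    """Average fraction of shared k-nearest neighbors between two views of
    the same N paired samples (1.0 means identical neighborhoods)."""
    na, nb = knn_sets(za, k), knn_sets(zb, k)
    return sum(len(sa & sb) for sa, sb in zip(na, nb)) / (k * len(na))


def select_layers(layers_a: Dict[str, Matrix], layers_b: Dict[str, Matrix],
                  k: int) -> Tuple[str, str, float]:
    """Return the (layer_a, layer_b, score) pair with the highest mutual-kNN score."""
    scored = ((la, lb, mutual_knn(layers_a[la], layers_b[lb], k))
              for la, lb in product(layers_a, layers_b))
    return max(scored, key=lambda t: t[2])


# Toy example with four paired samples (hypothetical layer names and values):
# the intermediate image layer and text layer share neighborhoods, the last layers do not.
image_layers = {
    "img_L12": [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]],
    "img_L24": [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [0.1, 0.9]],
}
text_layers = {
    "txt_L6": [[0.0, 1.0], [0.1, 0.9], [1.0, 0.0], [0.9, 0.1]],
    "txt_L12": [[1.0, 0.0], [0.0, 1.0], [0.1, 0.9], [0.9, 0.1]],
}
best = select_layers(image_layers, text_layers, k=1)
print(best)  # ('img_L12', 'txt_L6', 1.0)
```

In practice, each dictionary would hold embeddings extracted from every layer of a frozen encoder over the same set of paired samples; the search costs one mutual-kNN evaluation per layer pair, which is cheap compared with training the alignment functions.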

Evaluation setting and the baselines

We evaluate model performance on zero-shot classification and cross-modal retrieval tasks. For zero-shot classification, we consider 22 datasets from the CLIP benchmark. For cross-modal retrieval, we consider Flickr30k and MS COCO test splits to evaluate both text-to-image and image-to-text performance.

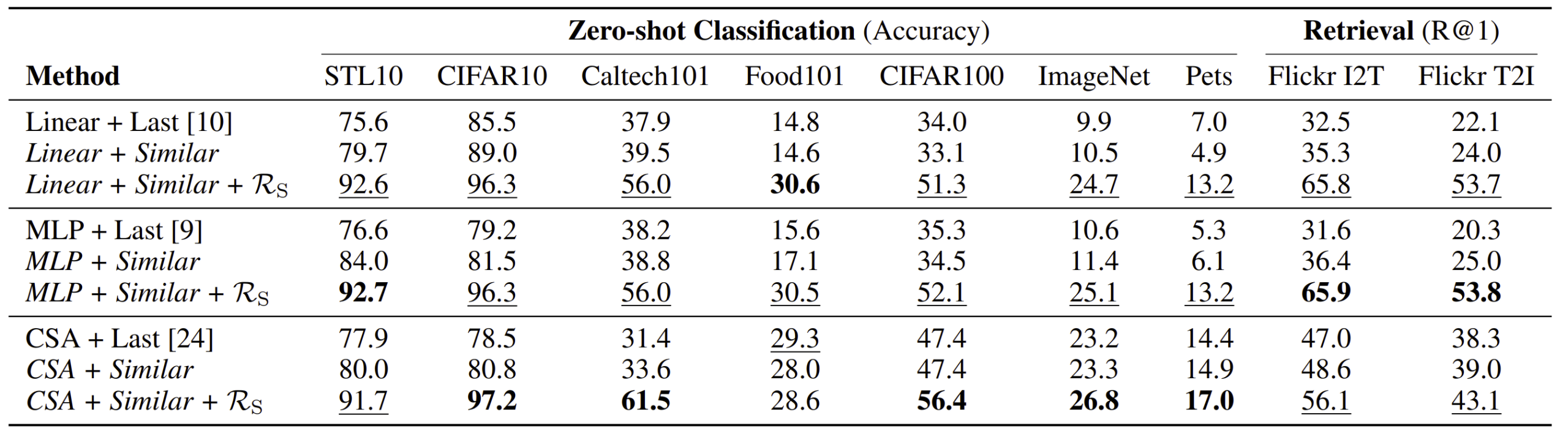

Overall results

We evaluate STRUCTURE across 24 datasets for zero-shot classification and cross-modal retrieval, demonstrating substantial performance gains with minimal paired data. STRUCTURE consistently improves generalization across alignment methods—yielding up to 74% and 137% relative improvements in classification and retrieval, respectively. Selecting layers based on representational similarity further boosts performance, and combining both strategies delivers the best results. Notably, on CIFAR10, our approach outperforms CLIP using just 0.02% of its training data, showcasing STRUCTURE’s effectiveness in low-data regimes.
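As a quick check of the 0.02% figure, assuming the training set is the 80K COCO pairs used elsewhere on this page and that CLIP was trained on roughly 400M image-text pairs:

```latex
\frac{8 \times 10^{4}\ \text{pairs}}{4 \times 10^{8}\ \text{pairs}} \;=\; 2 \times 10^{-4} \;=\; 0.02\%
```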

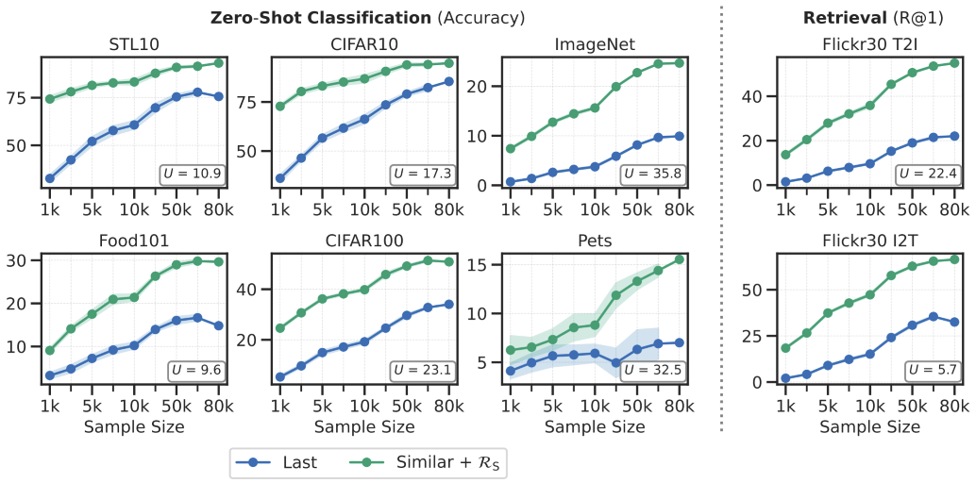

Scale down the training data

STRUCTURE remains highly effective even with extremely limited data, achieving strong performance with as few as 1,000 paired samples. When combined with similarity-based layer selection, it consistently outperforms standard alignment methods. Label efficiency, measured by the utility metric, shows that STRUCTURE achieves over 20× efficiency on tasks such as CIFAR100 classification and Flickr30k retrieval. These results highlight STRUCTURE’s ability to generalize well under severe data constraints, making it particularly valuable in low-resource settings.

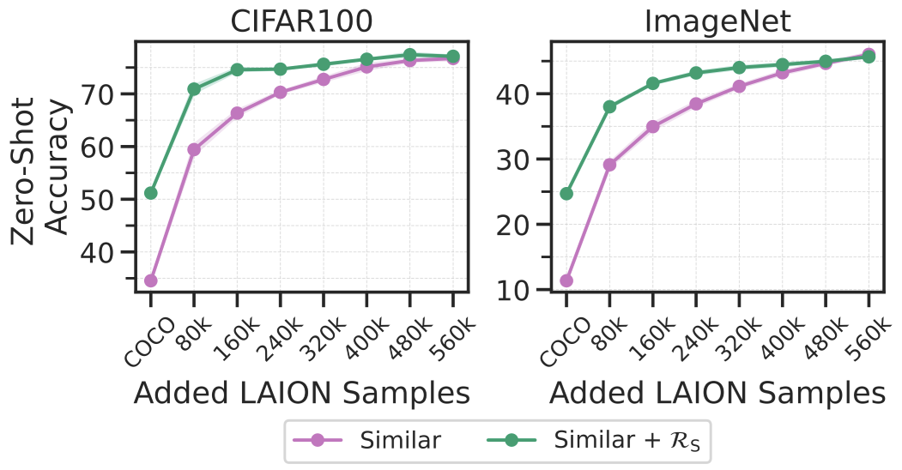

Scale up the training data

As more paired samples from LAION are added to the 80K-sample COCO training set, STRUCTURE continues to improve performance. The steepest gain occurs with the first 80K added samples, while further increases yield smaller but steady improvements. Notably, the impact of STRUCTURE regularization diminishes as more data becomes available, highlighting its particular effectiveness in low-data regimes, where preserving pretrained structure is most beneficial.

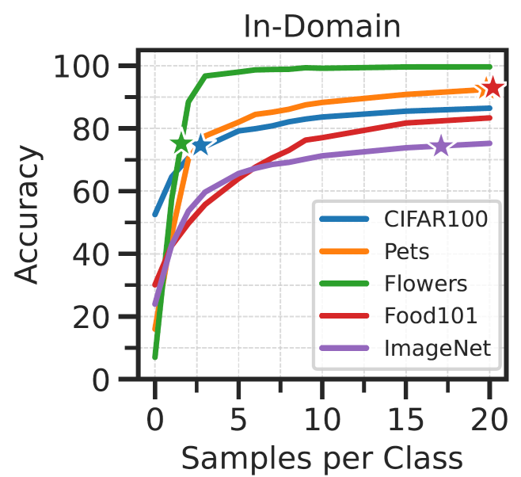

Train-test data distribution shift

Despite the advantages of our alignment approach in low-data regimes, performance remains low on certain datasets. We attribute this to distribution shifts between the COCO-based training set and the target evaluation domains, including differences in image content, label granularity, and overall dataset coverage and scale. Here we show that including a small number of in-domain samples in the training set can significantly improve the performance.

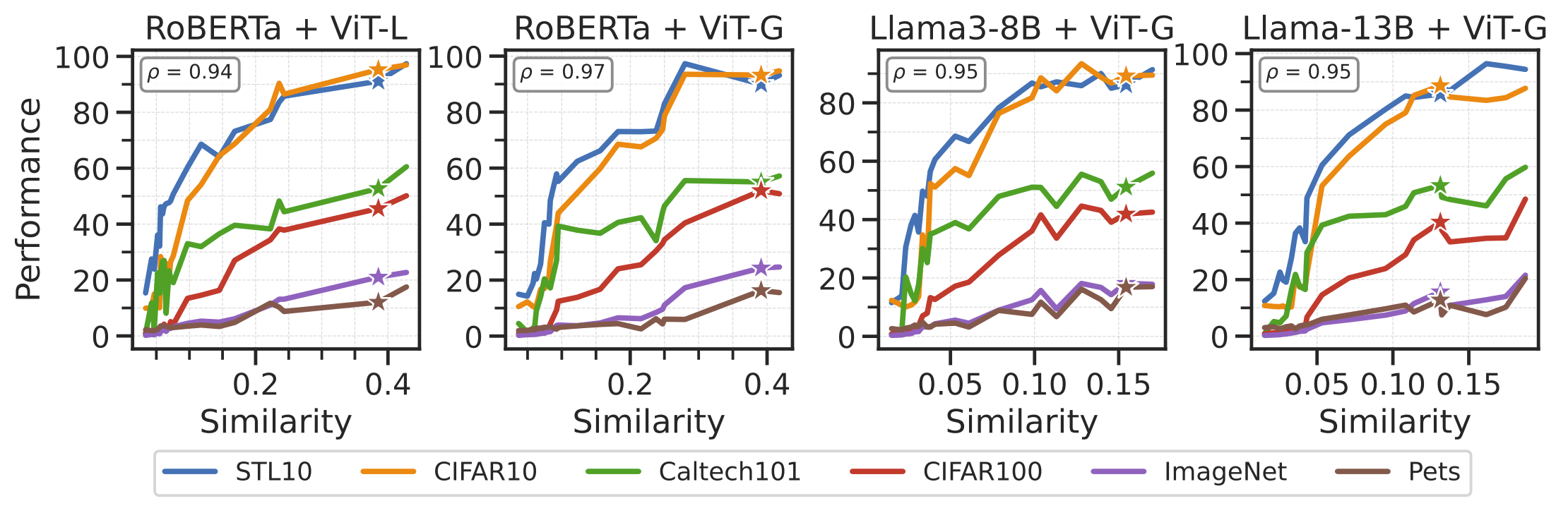

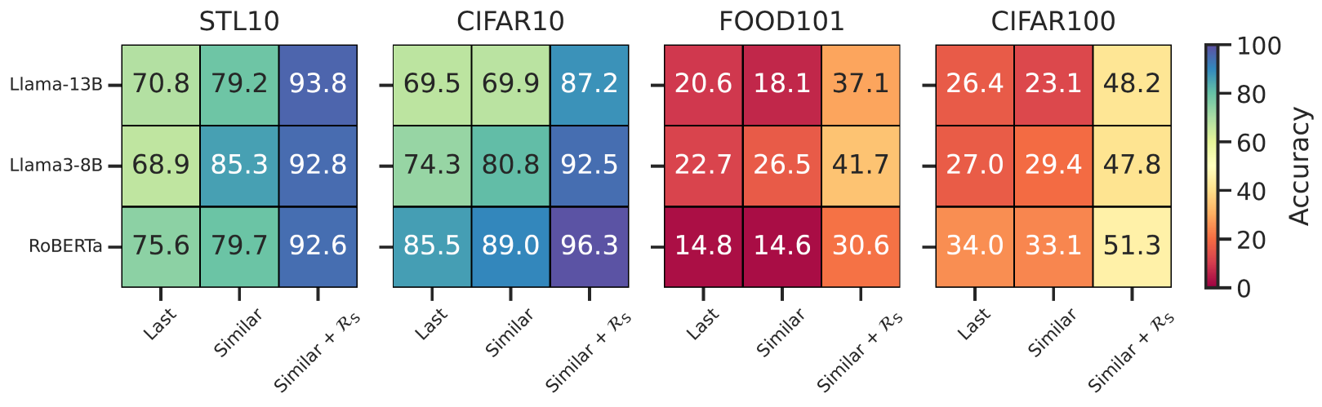

Our approach works across different pairs of models

We explore the performance across different language models, each combined with a DINOv2 ViT-G encoder. The Figure below shows that our regularization term and layer selection strategy consistently improve results across all combinations of models.

Neighborhood preservation

To verify that STRUCTURE preserves the pretrained neighborhood structure, we evaluated alignment consistency during training. Unlike standard methods, whose alignment consistency degrades over time, STRUCTURE maintains stable, geometry-respecting alignments, demonstrating its ability to prevent overfitting and preserve meaningful relationships in the embedding space.

Code

A PyTorch implementation of STRUCTURE is available on GitHub.

Contributors

The following people contributed to this work: