Fine-grained Classes and How to Find Them

FALCON is a method that discovers fine-grained classes in coarsely labeled data without any supervision at the fine-grained level. The key insight behind FALCON is to simultaneously learn the relations between coarse and fine-grained classes and to discover the fine-grained classes given the estimated relations.

In many practical applications, coarse-grained labels are more readily available than fine-grained labels that reflect subtle differences between classes. However, existing methods cannot leverage coarse labels to infer fine-grained labels in an unsupervised manner. To bridge this gap, we propose FALCON, a method that discovers fine-grained classes from coarsely labeled data without any supervision at the fine-grained level. FALCON simultaneously infers unknown fine-grained classes and the underlying relationships between coarse and fine-grained classes. Moreover, FALCON is a modular method that can effectively learn from multiple datasets labeled with different strategies. We evaluate FALCON on eight image classification tasks and a single-cell classification task. FALCON outperforms baselines by a large margin, achieving a 22% improvement over the best baseline on the tieredImageNet dataset with over 600 fine-grained classes.

Publication

Fine-grained Classes and How to Find Them

Matej Grcić*, Artyom Gadetsky*, Maria Brbić.

International Conference on Machine Learning (ICML), 2024.

@inproceedings{grcic24fine,
  title={Fine-grained Classes and How to Find Them},
  author={Grci{\'c}, Matej and Gadetsky, Artyom and Brbi{\'c}, Maria},
  booktitle={International Conference on Machine Learning},
  year={2024},
}

Coarse labels can provide supervision to infer fine-grained labels and the relationships between coarse and fine-grained classes

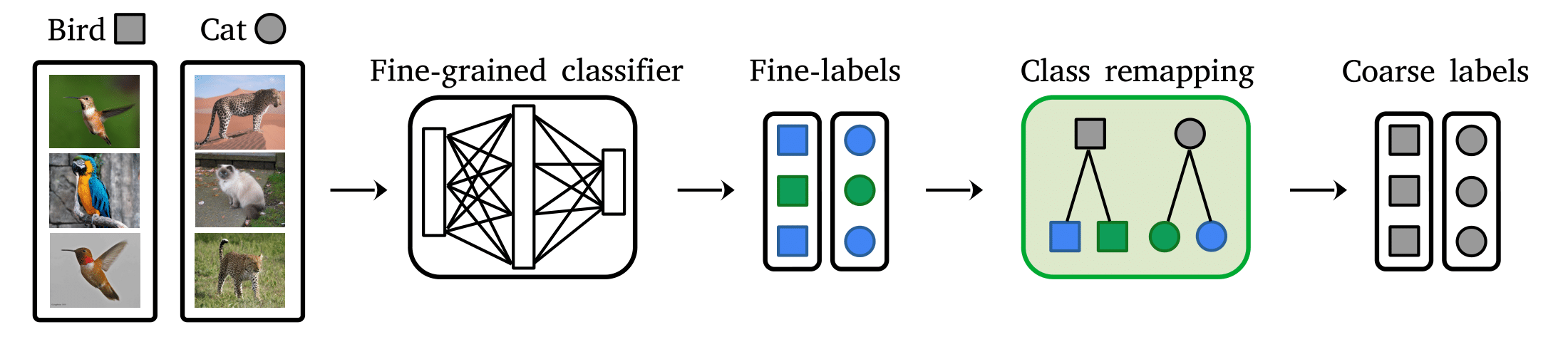

In our work, we present FALCON, a method to infer fine-grained labels given solely coarse labels. The key observation behind FALCON is that a coarse labeling procedure can be seen as labeling a data sample at the fine-grained level and then remapping the predicted class to the coarse level. Because ground truth coarse labels are available, FALCON uses coarse supervision to train both the fine-grained classifier and the mapping between fine-grained and coarse classes.
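The remapping view can be illustrated with a minimal NumPy sketch. It assumes the class relations are stored as a binary matrix `mapping` with one row per fine-grained class and one column per coarse class, so that coarse probabilities are sums of fine-grained probabilities. The function names, the matrix layout, and the toy numbers are illustrative assumptions, not taken from the FALCON code.

```python
import numpy as np

def coarse_probs(fine_probs, mapping):
    """Sum fine-grained probabilities within each coarse class.

    fine_probs: (n_samples, n_fine) array, rows sum to 1.
    mapping:    (n_fine, n_coarse) binary array with a single 1 per row
                (assumed representation of the class relations).
    """
    return fine_probs @ mapping

def coarse_cross_entropy(fine_probs, mapping, coarse_labels, eps=1e-12):
    """Cross-entropy between remapped predictions and coarse labels."""
    p = coarse_probs(fine_probs, mapping)
    picked = p[np.arange(len(coarse_labels)), coarse_labels]
    return float(-np.mean(np.log(picked + eps)))

# Toy setup: fine classes 0-1 belong to coarse class 0, fine classes 2-3 to coarse class 1.
mapping = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)
fine_probs = np.array([[0.6, 0.3, 0.05, 0.05],
                       [0.1, 0.1, 0.7, 0.1]])
coarse_labels = np.array([0, 1])

p_coarse = coarse_probs(fine_probs, mapping)
loss = coarse_cross_entropy(fine_probs, mapping, coarse_labels)
```

Only coarse labels enter the loss, yet the gradient would flow into the fine-grained predictions, which is how coarse supervision can shape the fine-grained classifier.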

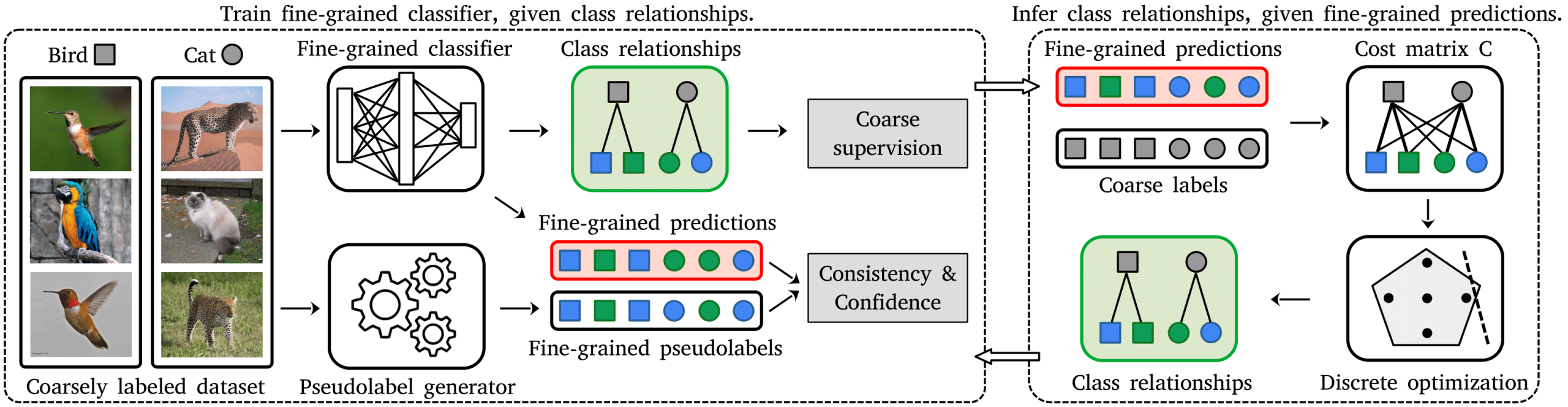

Training FALCON

FALCON learns the fine-grained classifier and the class relations using an efficient alternating optimization procedure. Given the current estimate of the relations between coarse and fine-grained classes, the fine-grained classifier is updated to minimize the discrepancy between the predicted and ground truth coarse labels. In addition to coarse supervision, we introduce two regularization techniques to facilitate learning the fine-grained classifier: (i) consistency regularization encourages the fine-grained classifier to output consistent predictions for a sample and its neighbours; and (ii) confidence regularization promotes confident assignments of a sample to fine-grained classes. Given the updated fine-grained classifier, we update the current estimate of the class relations by solving a traditional matching problem whose cost matrix encodes the strength of connections between coarse and fine classes. This alternating optimization procedure is repeated until convergence, yielding both the fine-grained labels and the inferred relations between the fine-grained and coarse labels.
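The ingredients of this procedure can be sketched on toy data as follows. Everything here is an assumption made for illustration: the exact form of the two regularizers, the definition of connection strength as accumulated probability mass, the constraint that every coarse class receives at least one fine class, and the exhaustive search, which stands in for a proper matching solver and only scales to a handful of classes.

```python
import itertools
import numpy as np

def confidence_loss(probs, eps=1e-12):
    """Mean entropy of the predictions; lower values mean more confident assignments."""
    return float(-np.mean(np.sum(probs * np.log(probs + eps), axis=1)))

def consistency_loss(probs, neighbour_probs, eps=1e-12):
    """Negative log agreement between each sample and its neighbour (one possible choice)."""
    return float(-np.mean(np.log(np.sum(probs * neighbour_probs, axis=1) + eps)))

def connection_strength(fine_probs, coarse_labels, n_coarse):
    """strength[f, c]: probability mass of fine class f over samples with coarse label c."""
    one_hot = np.eye(n_coarse)[coarse_labels]
    return fine_probs.T @ one_hot

def update_relations(strength):
    """Pick the fine-to-coarse assignment with maximal total strength.

    Assumed constraint: every coarse class must receive at least one fine class.
    Exhaustive search, exponential in the number of fine classes (toy sizes only).
    """
    n_fine, n_coarse = strength.shape
    best, best_score = None, -np.inf
    for assign in itertools.product(range(n_coarse), repeat=n_fine):
        if len(set(assign)) < n_coarse:
            continue  # some coarse class would be left empty
        score = sum(strength[f, c] for f, c in enumerate(assign))
        if score > best_score:
            best, best_score = assign, score
    mapping = np.zeros((n_fine, n_coarse))
    mapping[np.arange(n_fine), list(best)] = 1.0
    return mapping

# Toy predictions for four samples over four fine classes, with two coarse classes.
fine_probs = np.array([[0.7, 0.2, 0.05, 0.05],
                       [0.2, 0.7, 0.05, 0.05],
                       [0.05, 0.05, 0.8, 0.1],
                       [0.1, 0.05, 0.05, 0.8]])
coarse_labels = np.array([0, 0, 1, 1])

strength = connection_strength(fine_probs, coarse_labels, n_coarse=2)
mapping = update_relations(strength)
```

In a full training loop, the classifier update (coarse loss plus the two regularizers) and the call to `update_relations` would alternate until the mapping stops changing.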

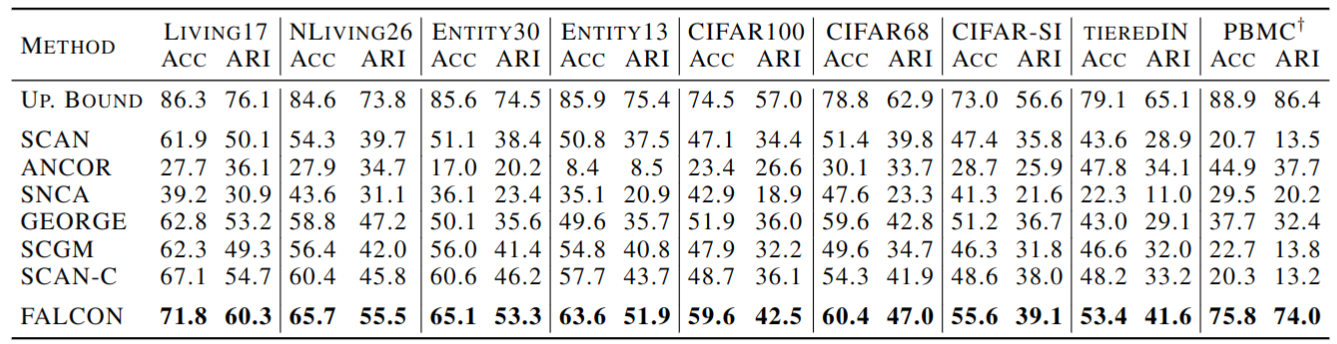

FALCON achieves state-of-the-art results in fine-grained class discovery

We compare FALCON to baselines on eight image classification datasets and a single-cell dataset. The results indicate that FALCON outperforms baselines by a large margin on both image and single-cell data. For example, on the BREEDS benchmark of four datasets (Living17, Nonliving26, Entity30, Entity13), FALCON achieves an average improvement of 9% in accuracy and 16% in ARI over the best baselines. On the tieredImageNet dataset with 608 fine-grained classes grouped into 34 coarse classes, FALCON outperforms the best baseline, ANCOR, by 12% in accuracy and 22% in ARI. Moreover, FALCON improves on both balanced datasets (BREEDS benchmark and CIFAR100) and unbalanced datasets (CIFAR68, CIFAR-SI, tieredImageNet and single-cell PBMC).
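For readers unfamiliar with ARI (adjusted Rand index), the following is a small standard-library sketch of the usual pair-counting formula. It is included only to make the metric concrete and is not the evaluation code used for the reported numbers. The score is invariant to how the discovered clusters are numbered, which is why it suits class discovery.

```python
from collections import Counter
from math import comb

def adjusted_rand_index(labels_true, labels_pred):
    """Adjusted Rand index between two labelings of the same samples."""
    n = len(labels_true)
    if n < 2:
        return 1.0  # no pairs to compare
    cells = Counter(zip(labels_true, labels_pred))  # contingency table entries
    rows = Counter(labels_true)
    cols = Counter(labels_pred)
    sum_cells = sum(comb(v, 2) for v in cells.values())
    sum_rows = sum(comb(v, 2) for v in rows.values())
    sum_cols = sum(comb(v, 2) for v in cols.values())
    expected = sum_rows * sum_cols / comb(n, 2)  # agreement expected by chance
    max_index = (sum_rows + sum_cols) / 2
    if max_index == expected:
        return 1.0  # degenerate case, e.g. both labelings put everything in one cluster
    return (sum_cells - expected) / (max_index - expected)

# Same partition with swapped cluster ids, and a partition that cuts across the true one.
same_partition = adjusted_rand_index([0, 0, 1, 1], [1, 1, 0, 0])
crossed_partition = adjusted_rand_index([0, 0, 1, 1], [0, 1, 0, 1])
```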

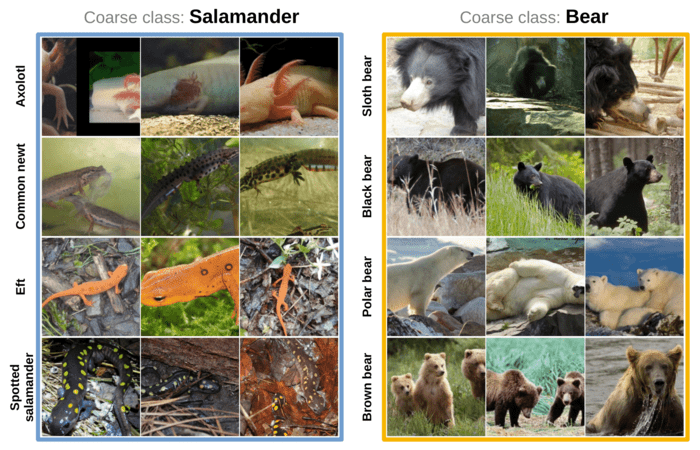

FALCON discovers fine-grained classes that correspond to subspecies

We visualize the three most confident predictions for every fine-grained class associated with coarse classes Salamander and Bear from the Living17 dataset. We validate the recovered fine-grained classes and confirm that they indeed correspond to different salamander subspecies (Axolotl, Common newt, Eft, and Spotted salamander) and bear subspecies (Sloth bear, Black bear, Polar bear, and Brown bear). This indicates that FALCON produces meaningful fine-grained classes even when differences between these subclasses are very subtle.

FALCON accurately infers coarse to fine class relationships

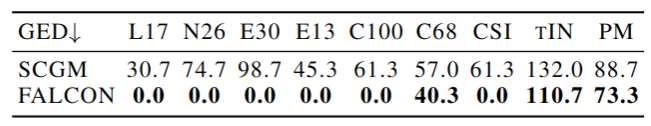

We evaluate how well FALCON infers coarse to fine class relationships by measuring the graph edit distance (GED) to the ground truth relationships. A graph edit distance of zero indicates that the two graphs are identical, meaning the ground truth relationships are perfectly inferred. We compare FALCON with SCGM, which learns class relations implicitly through a data generation process. The results show that FALCON perfectly matches the ground truth relations on all balanced datasets, which is not the case for SCGM. On the unbalanced datasets, not all class relationships are correctly recovered, but FALCON still substantially outperforms SCGM on all of them.
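As a rough illustration of the metric, the sketch below treats each set of relations as a bipartite graph with one edge per fine class. It assumes the discovered fine classes have already been aligned with ground truth class names and that only edge insertions and deletions are counted, each at unit cost. Under those assumptions the distance is the size of the symmetric difference of the two edge sets. The exact protocol behind the reported numbers may differ.

```python
def relation_edges(fine_to_coarse):
    """Represent class relations as a set of (fine, coarse) edges."""
    return {(fine, coarse) for fine, coarse in fine_to_coarse.items()}

def graph_edit_distance(predicted, ground_truth):
    """Number of edge insertions and deletions needed to turn one graph into the other."""
    return len(relation_edges(predicted) ^ relation_edges(ground_truth))

ground_truth = {
    "Axolotl": "Salamander",
    "Eft": "Salamander",
    "Polar bear": "Bear",
    "Brown bear": "Bear",
}
# Hypothetical prediction with a single fine class attached to the wrong coarse class.
predicted = dict(ground_truth, Eft="Bear")

perfect = graph_edit_distance(ground_truth, ground_truth)
one_mistake = graph_edit_distance(predicted, ground_truth)
```

With this counting, one misassigned fine class costs two edits: deleting the wrong edge and inserting the correct one.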

FALCON can learn from multiple datasets with inconsistent coarse labels

Fine-grained classes can be grouped into coarse classes in different ways. For example, one can group animals according to diet (carnivores vs omnivores), size (small vs large), or biological taxonomy (Canis lupus vs Canis familiaris). Thus, datasets often have different labels despite aggregating instances of the same fine classes.

FALCON applies seamlessly to training on multiple datasets with different coarse labels because it disentangles training the fine-grained classifier from learning the mapping between coarse and fine-grained classes. Thus, extending FALCON to learn from multiple datasets with shared fine-grained classes but varying coarse taxonomies simply requires introducing taxonomy-specific mappings between the fine-grained classes and each taxonomy's coarse classes. Each taxonomy-specific mapping remaps the shared fine-grained classes to the dataset-specific coarse taxonomy to make use of the available coarse supervision, resulting in a simple yet effective approach to learning from multiple datasets.
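A minimal NumPy sketch of this idea follows, assuming one binary mapping matrix per taxonomy and a single shared set of fine-grained predictions. The function name, the way the per-dataset losses are summed, and the toy groupings are illustrative assumptions rather than the released implementation.

```python
import numpy as np

def multi_taxonomy_loss(fine_probs_list, coarse_labels_list, mappings, eps=1e-12):
    """Sum of coarse cross-entropies, each computed with its own taxonomy mapping.

    fine_probs_list:    per-dataset (n_samples, n_fine) predictions of the shared classifier.
    coarse_labels_list: per-dataset coarse labels in that dataset's taxonomy.
    mappings:           per-taxonomy (n_fine, n_coarse) binary matrices.
    """
    total = 0.0
    for fine_probs, labels, mapping in zip(fine_probs_list, coarse_labels_list, mappings):
        coarse = fine_probs @ mapping  # remap shared fine classes to this taxonomy
        picked = coarse[np.arange(len(labels)), labels]
        total += -np.mean(np.log(picked + eps))
    return float(total)

# Two hypothetical taxonomies over the same four fine classes.
mapping_t1 = np.array([[1, 0], [1, 0], [0, 1], [0, 1]], dtype=float)  # groups {0,1}, {2,3}
mapping_t2 = np.array([[1, 0], [0, 1], [1, 0], [0, 1]], dtype=float)  # groups {0,2}, {1,3}

probs_d1 = np.array([[0.7, 0.1, 0.1, 0.1]])  # sample from the first dataset
probs_d2 = np.array([[0.1, 0.1, 0.7, 0.1]])  # sample from the second dataset
labels_d1 = np.array([0])
labels_d2 = np.array([0])

loss = multi_taxonomy_loss([probs_d1, probs_d2],
                           [labels_d1, labels_d2],
                           [mapping_t1, mapping_t2])
```

Because the fine-grained predictions are shared, each taxonomy constrains them from a different angle: two fine classes merged under one taxonomy may be separated by the other.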

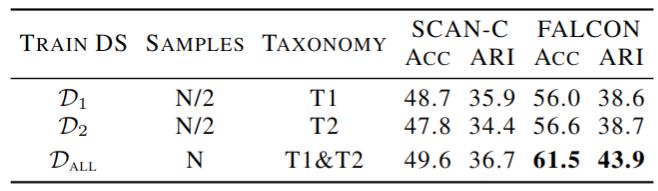

We evaluate the ability of FALCON to learn from multiple datasets by adapting the CIFAR100 dataset. The CIFAR100 dataset has a default grouping of 100 fine classes into 20 coarse classes, which we denote as T1. We construct a meaningful alternative grouping of fine classes into coarse classes, which we denote as T2. We split the training set into two halves and label the first half according to T1 and the second half according to T2. Thus, the resulting splits, denoted D1 and D2, correspond to two datasets with the same underlying set of fine-grained classes and different coarse classes. We keep the CIFAR100 test set unmodified to track the generalization performance for different training configurations. The results indicate that FALCON trained using both available taxonomies achieves a 14% relative improvement in ARI and a 10% relative improvement in clustering accuracy compared to FALCON trained on a single dataset. These results indicate that FALCON, unlike the considered baselines, can effectively utilize different labeling policies to improve performance.

Code

A PyTorch implementation of FALCON is available on GitHub.

Checkpoints

| File | Description |
| --- | --- |
| trained_models.zip | Trained FALCON models on all the considered datasets |

Contributors

The following people contributed to this work: