Large (Vision) Language Models are Unsupervised In-Context Learners

Joint inference is a framework that lets large (vision) language models adapt to a given task without supervision, improving on independent zero-shot predictions. The framework is compatible with any existing large (vision) language model and, despite being fully unsupervised, it often performs on par with supervised approaches that rely on ground-truth labels.

Recent advances in large language and vision-language models have enabled zero-shot inference, allowing models to solve new tasks without task-specific training. Various adaptation techniques such as prompt engineering, In-Context Learning (ICL), and supervised fine-tuning can further enhance the model’s performance on a downstream task, but they require substantial manual effort to construct effective prompts or labeled examples. In this work, we introduce a joint inference framework for fully unsupervised adaptation, eliminating the need for manual prompt engineering and labeled examples. Unlike zero-shot inference, which makes independent predictions, joint inference makes predictions simultaneously for all inputs in a given task. Since direct joint inference involves computationally expensive optimization, we develop efficient approximation techniques, leading to two unsupervised adaptation methods: unsupervised fine-tuning and unsupervised ICL.

Publication

Large (Vision) Language Models are Unsupervised In-Context Learners

Artyom Gadetsky*, Andrei Atanov*, Yulun Jiang*, Zhitong Gao, Ghazal Hosseini Mighan, Amir Zamir, Maria Brbić.

International Conference on Learning Representations (ICLR), 2025.

@inproceedings{gadetsky2025large,

title={Large (Vision) Language Models are Unsupervised In-Context Learners},

author={Artyom Gadetsky and Andrei Atanov and Yulun Jiang and Zhitong Gao and Ghazal Hosseini Mighan and Amir Zamir and Maria Brbic},

booktitle={International Conference on Learning Representations},

year={2025},

}

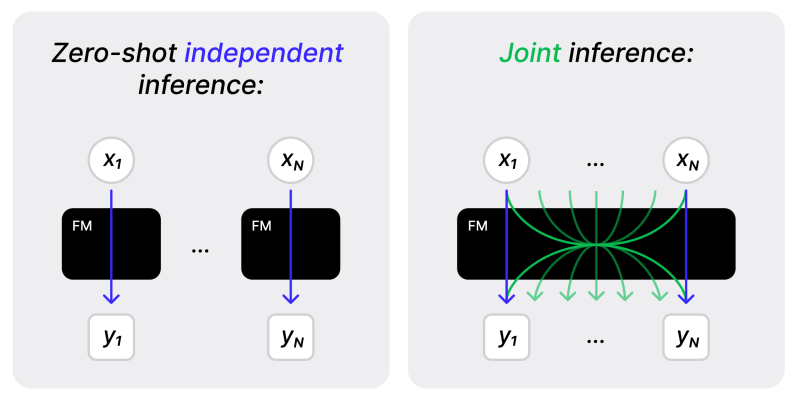

Key idea: making joint predictions for multiple inputs

Unlike standard zero-shot inference, which makes a prediction y independently for each input x, joint inference makes predictions for multiple inputs at the same time. Such joint predictions leverage inter-sample dependencies to reason over multiple examples simultaneously and guide the model toward consistent predictions. Performing joint inference requires solving an optimization problem that becomes intractable for a large number of examples N. To address this, we develop approximation techniques, resulting in two unsupervised adaptation methods: unsupervised fine-tuning and unsupervised ICL.
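
As a rough formalization of this contrast, zero-shot inference solves N separate problems, whereas joint inference solves a single coupled one. The symbol p_θ for the foundation model's predictive distribution is notation assumed here for illustration and may differ from the paper's:

```latex
\begin{align}
\text{zero-shot:} \quad & \hat{y}_n = \arg\max_{y}\; p_\theta(y \mid x_n), \qquad n = 1, \dots, N, \\
\text{joint:} \quad & (\hat{y}_1, \dots, \hat{y}_N) = \arg\max_{y_1, \dots, y_N}\; p_\theta(y_1, \dots, y_N \mid x_1, \dots, x_N).
\end{align}
```

If each answer can take K values, the joint search ranges over K^N candidate assignments, which is why exhaustive optimization is impractical for large N.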

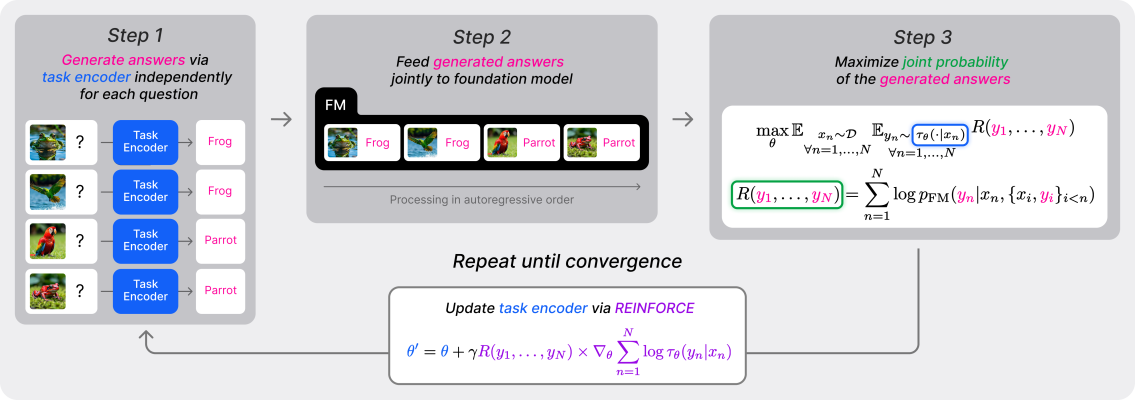

Unsupervised fine-tuning

Unsupervised fine-tuning is a principled optimization method for performing joint inference, enabling unsupervised adaptation to a new task. Given a dataset of questions, each iteration of the optimization first generates answers independently for a batch of questions via a task encoder (Step 1). These answers are then fed into a foundation model to estimate their joint probability, which provides a quantitative measure of answer quality (Step 2). Finally, the task encoder is updated to maximize the joint probability (Step 3). These steps are repeated until convergence, yielding a task encoder adapted to the given task without any supervision. We use the foundation model itself as the task encoder and train it with LoRA for parameter efficiency. Notably, this parametrization, coupled with our joint inference objective, can be seen as an instantiation of self-training, in which a model improves by obtaining feedback from itself.
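
The three steps can be illustrated with a deliberately small toy loop. This is only a sketch under strong assumptions and not the authors' implementation: the task encoder is a table of logits rather than a LoRA-adapted foundation model, `toy_joint_score` is a hypothetical stand-in for the foundation model's joint probability, and a score-function (REINFORCE) estimator is swapped in for the update, since the text above does not say which estimator is used.

```python
import math
import random


def softmax(logits):
    """Turn a list of logits into a probability distribution."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def toy_joint_score(groups, answers):
    """Hypothetical stand-in for the foundation model's joint score (Step 2).

    It rewards answer sets in which questions from the same group share an
    answer; a real system would query the model's joint probability instead.
    """
    score = 0.0
    for i in range(len(answers)):
        for j in range(i):
            if groups[i] == groups[j]:
                score += 1.0 if answers[i] == answers[j] else -1.0
    return score


def unsupervised_finetune(groups, num_answers, steps=200, batch_size=4,
                          lr=0.1, seed=0):
    """Toy loop: sample answers, score them jointly, update the encoder."""
    rng = random.Random(seed)
    batch_size = min(batch_size, len(groups))
    # Tabular "task encoder": one row of logits per question (an assumption;
    # the source uses the foundation model itself with LoRA).
    logits = [[0.0] * num_answers for _ in groups]
    baseline = 0.0
    for _ in range(steps):
        batch = rng.sample(range(len(groups)), batch_size)
        # Step 1: generate an answer independently for each question.
        probs = {n: softmax(logits[n]) for n in batch}
        answers = {n: rng.choices(range(num_answers), weights=probs[n])[0]
                   for n in batch}
        # Step 2: score the whole batch of answers jointly.
        reward = toy_joint_score([groups[n] for n in batch],
                                 [answers[n] for n in batch])
        # Step 3: score-function (REINFORCE) step toward a higher joint score.
        advantage = reward - baseline
        for n in batch:
            for k in range(num_answers):
                indicator = 1.0 if k == answers[n] else 0.0
                logits[n][k] += lr * advantage * (indicator - probs[n][k])
        # Running average of rewards, used to reduce gradient variance.
        baseline = 0.9 * baseline + 0.1 * reward
    return logits
```

A call such as `unsupervised_finetune([0, 0, 0, 1, 1, 1], num_answers=2)` returns the updated logits table. Because the toy score only rewards consistency within a group, it cannot tell which label is correct; in the actual method that signal comes from the foundation model.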

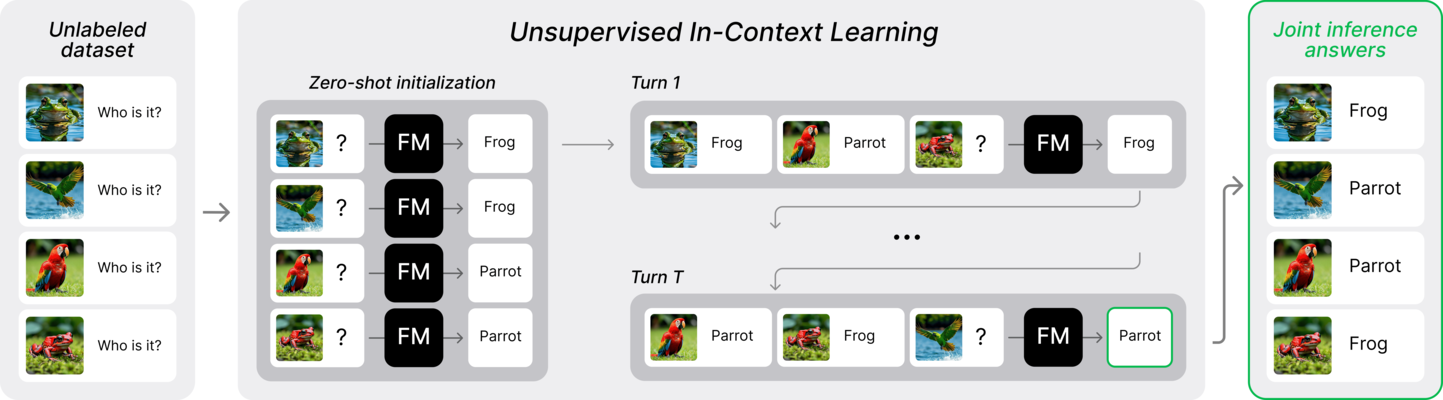

Unsupervised ICL

Although unsupervised fine-tuning offers a principled approach, it requires access to model weights to define a task encoder and to output probabilities to compute the optimization objective. This makes it suitable for open-weight models, but limits its applicability to most closed-weight models, such as GPT-4. To make the joint inference framework broadly applicable, we propose unsupervised ICL, which can perform joint inference for any task and any existing foundation model. Our method first generates an answer for each question independently using zero-shot prompting. It then enters a multi-turn stage where, at each turn and for each question, the model is prompted with in-context examples randomly sampled from the dataset (excluding the question under consideration), paired with their answers from the previous turn. These examples are fed into the model in left-to-right order, followed by the current question, to generate a refined answer. This refinement is repeated for T turns, yielding the final answers.
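
The multi-turn procedure can be sketched in a few lines. This is a minimal illustration rather than the released implementation: `model(examples, question)` is a hypothetical callable that builds a prompt from the given (question, answer) pairs followed by the current question and returns the model's answer, and the parameter names `num_turns` and `num_examples` are chosen here for readability.

```python
import random


def unsupervised_icl(questions, model, num_turns=2, num_examples=3, seed=0):
    """Refine zero-shot answers by re-prompting with self-labeled examples.

    `model(examples, question)` is a hypothetical callable: `examples` is a
    list of (question, answer) pairs placed before `question` in the prompt,
    and the return value is the model's answer.
    """
    rng = random.Random(seed)
    # Turn 0: independent zero-shot answers (no in-context examples).
    answers = [model([], q) for q in questions]
    for _ in range(num_turns):
        refined = []
        for i, question in enumerate(questions):
            # Sample in-context examples, excluding the current question.
            others = [j for j in range(len(questions)) if j != i]
            picked = rng.sample(others, min(num_examples, len(others)))
            # Pair each sampled question with its previous-turn answer.
            examples = [(questions[j], answers[j]) for j in picked]
            refined.append(model(examples, question))
        # All answers are replaced together once the turn is complete.
        answers = refined
    return answers
```

Since the loop only needs text in and text out, the same sketch would apply to a closed-weight model accessed through an API, with `model` wrapping the API call.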

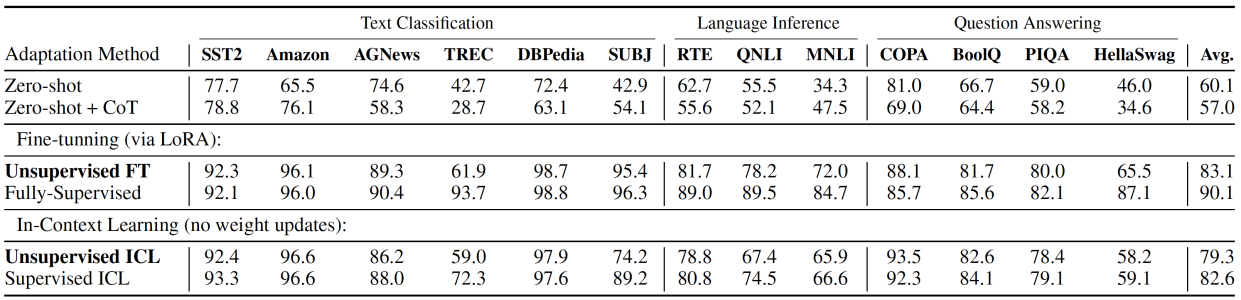

Evaluation setting and the baselines

We evaluate the performance of our two methods for joint inference across a wide range of tasks, including text classification, image classification, question answering, visual question answering, natural language inference, common-sense reasoning, and math problem-solving. We use accuracy as the evaluation metric for all the experiments.
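
For reference, accuracy and the difference between two accuracies in percentage points can be computed as in the sketch below. The helper names are hypothetical and the example values are made up purely to show the arithmetic; they are not results from the paper.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that exactly match the reference labels."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels must have the same length")
    if not labels:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def absolute_improvement(new_accuracy, base_accuracy):
    """Difference between two accuracies, in percentage points."""
    return 100.0 * (new_accuracy - base_accuracy)


# Illustrative values only: three of four predictions match the labels.
toy_accuracy = accuracy(["a", "b", "a", "b"], ["a", "b", "b", "b"])  # 0.75
```

An absolute improvement is a difference in percentage points, so moving from 50% to 75% accuracy is a 25-point gain, not a 50% relative gain.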

We include the following baselines and upper bounds in our evaluations: (1) Zero-shot inference makes predictions independently for each input example without task-specific fine-tuning or demonstrations; (2) Zero-shot with Chain-of-Thought (CoT) adds CoT reasoning prompts so that the model generates intermediate reasoning steps before the final answer; (3) Supervised ICL provides labeled training examples to the model as demonstrations. It therefore serves as an upper bound for our unsupervised ICL method, which does not use any labeled data. Similarly, (4) Fully-Supervised Fine-tuning (FT) applies supervised fine-tuning with LoRA using all labeled training examples and serves as an upper bound for our unsupervised fine-tuning method.

Results on natural language processing tasks

To study the performance of the joint inference framework on language tasks, we evaluate our methods on 13 benchmark datasets spanning various NLP tasks. We use the Llama-3.1-8B model for our methods and all the baselines. First, the results show that unsupervised fine-tuning substantially outperforms standard zero-shot inference. In particular, it brings a 23% absolute improvement on average over the 13 datasets, with gains of 52.5%, 30.6%, 26.3% and 19.5% on the SUBJ, Amazon, DBPedia and HellaSwag datasets, respectively. Furthermore, it often approaches the performance of its fully supervised counterpart, closely matching it on 6 out of 13 datasets. Second, unsupervised ICL also exhibits large performance gains over zero-shot inference, bringing a 19.2% absolute improvement on average over the 13 datasets. Remarkably, it is on par with supervised ICL on 10 out of 13 datasets, overall demonstrating the effectiveness of the proposed joint inference framework.

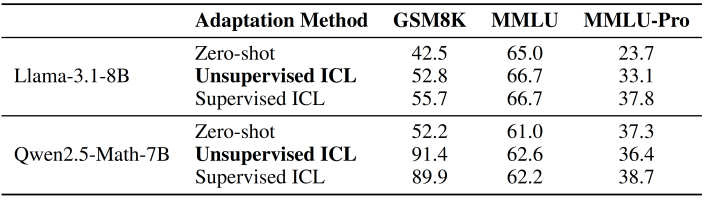

Results on mathematical reasoning and multitask language understanding

Furthermore, we evaluate unsupervised ICL on the GSM8K dataset, which requires reasoning capabilities, and on the MMLU and MMLU-Pro datasets, which cover broad knowledge across different disciplines. We employ Chain-of-Thought for our method and all the baselines on the GSM8K and MMLU-Pro datasets. Consequently, unsupervised ICL refines both the reasoning chains and the answers in a fully unsupervised manner. Our results indicate that our method is also applicable to these challenging benchmarks. For instance, it brings a remarkable 39.2% absolute improvement over the zero-shot baseline on the GSM8K dataset, also outperforming the supervised counterpart.

Closed-weight GPT-4o on image classification and visual multiple choice question answering

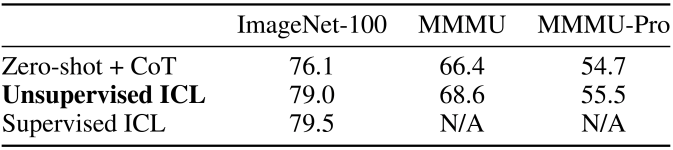

To demonstrate the applicability of the joint inference framework to closed-weight models, we employ GPT-4o and study the performance of unsupervised ICL on a subset of ImageNet and on the MMMU and MMMU-Pro datasets. For ImageNet, we construct a support set containing 1000 images from 100 classes and sample 5000 images for evaluation only. Specifically, to assess generalization, we refine the support set with our unsupervised ICL for two rounds and then measure performance on the evaluation set conditioned on the refined support set. For MMMU and MMMU-Pro, we run the methods directly on the validation split, since no data are available to construct support sets. Consequently, supervised ICL is not available for MMMU and MMMU-Pro. As before, we compare our unsupervised ICL to zero-shot inference and, on ImageNet, to supervised ICL, which uses ground-truth labels for the support set. Table 4 shows that unsupervised ICL brings an improvement of 3%, 2% and 1% over zero-shot inference with Chain-of-Thought prompting on the ImageNet, MMMU and MMMU-Pro datasets, respectively. Furthermore, unsupervised ICL approaches supervised ICL on the ImageNet dataset, overall demonstrating that our joint inference framework is also applicable to closed-weight models.

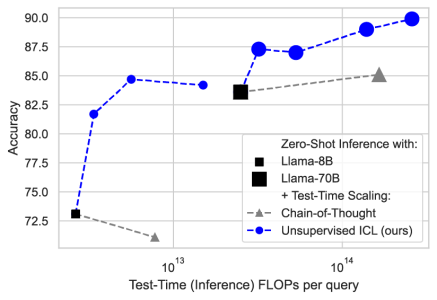

Unsupervised ICL scales effectively at test time

Our unsupervised ICL method consists of two stages: (i) a task adaptation stage, where we iteratively generate labels for unlabeled examples, creating a labeled support set; and (ii) a test stage, where we perform ICL inference on new test examples using the labeled support set from stage one. Since the adaptation stage is performed once per task, test-time compute scaling depends on the number of (unsupervised) ICL examples used in the second (test) stage. The figure below shows how performance improves as we scale test-time compute by increasing the number of ICL examples. We report performance on the RTE dataset with Llama-3.1 models. We find that test-time scaling of the 8B model with our method achieves a better compute-performance trade-off than zero-shot inference with the larger 70B model. Further scaling of the 70B model leads to additional gains, outperforming CoT in both compute efficiency and performance.
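
The test stage on its own can be sketched as follows. This is an illustration under assumptions, not the released code: `model(examples, question)` is a hypothetical callable, `support_set` is the list of self-labeled (question, answer) pairs produced once by the adaptation stage, and `num_examples` is the knob that trades test-time compute for accuracy.

```python
import random


def predict_with_support(model, support_set, test_questions,
                         num_examples, seed=0):
    """Stage (ii): answer new questions using a self-labeled support set.

    `support_set` is a list of (question, answer) pairs produced by the
    adaptation stage; `model(examples, question)` is a hypothetical callable.
    """
    rng = random.Random(seed)
    predictions = []
    for question in test_questions:
        # More examples mean a longer prompt and more compute per query.
        k = min(num_examples, len(support_set))
        examples = rng.sample(support_set, k)
        predictions.append(model(examples, question))
    return predictions
```

Calling this function with increasing values of `num_examples` while reusing the same `support_set` corresponds to the scaling axis described above: the adaptation cost is paid once, and only the per-query prompt length grows.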

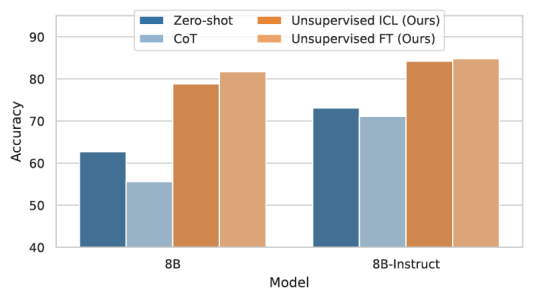

Unsupervised ICL improves non-instruction-tuned models

As in the previous experiment, the figure below shows the impact of instruction tuning on the performance of different inference methods on the RTE dataset. We find that the unsupervised ICL and FT methods applied to the base model perform better than both zero-shot and CoT inference with the instruction-tuned model, suggesting less reliance on supervised fine-tuning data.

Code

A PyTorch implementation of the joint inference framework is available on GitHub.

Contributors

The following people contributed to this work: