Weak-to-Strong Generalization under Distribution Shifts

RAVEN is a framework for robust weak-to-strong generalization that enables a strong model to learn from an ensemble of weak supervisors, even under significant distribution shifts between the weak supervisors’ training data and the strong model’s fine-tuning data. By adaptively learning to weight each supervisor, RAVEN overcomes the performance degradation seen in existing methods, achieving substantial gains across out-of-distribution vision, language, and alignment tasks.

As AI systems become increasingly complex and even superhuman, accurately supervising their behavior may exceed human capabilities. A promising research direction, known as weak-to-strong (W2S) generalization, explores how today’s “weak” supervisors (less capable AI models that stand in for the humans of the future) can teach tomorrow’s “strong” superhuman models. However, we find that this process breaks down under distribution shifts, i.e., when the strong model faces tasks or data that its weak supervisors are unfamiliar with, so that the labels they provide become unreliable (misaligned) training signals. To address this, we propose RAVEN (Robust AdaptiVe wEightiNg), a framework that enables robust weak-to-strong generalization. Instead of relying on a single weak supervisor, RAVEN learns to dynamically combine an ensemble of them, automatically identifying and prioritizing the most trustworthy supervision for the task at hand. Our experiments across image classification, text classification, and preference alignment show that RAVEN outperforms existing methods by over 40% on out-of-distribution (OOD) tasks, while matching or exceeding their performance on in-distribution (InD) tasks.

Publication

Weak-to-Strong Generalization under Distribution Shifts

Myeongho Jeon*, Jan Sobotka*, Suhwan Choi*, Maria Brbić.

@inproceedings{jeon2025weaktostrong,
  title={Weak-to-Strong Generalization under Distribution Shifts},
  author={Myeongho Jeon and Jan Sobotka and Suhwan Choi and Maria Brbi\'c},
  booktitle={The Thirty-ninth Annual Conference on Neural Information Processing Systems},
  year={2025},
  url={https://openreview.net/forum?id=fEg31YjLct}
}

Weak-to-strong generalization fails under distribution shifts

The standard weak-to-strong (W2S) framework studies how a strong, capable model can be trained using labels provided by a weaker, less capable model. The goal is for the strong model to generalize beyond its weak supervision and approach the performance it would have achieved if trained with ground-truth labels. This provides a powerful paradigm for training future superhuman models.

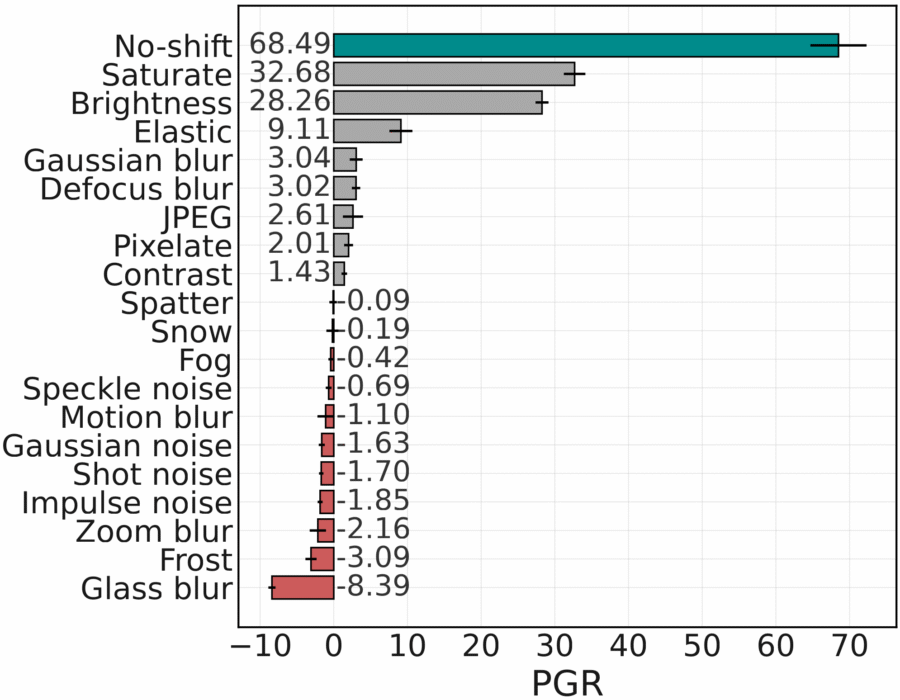

However, a critical assumption is that the weak supervisor is at least familiar with the data it is labeling. What happens when the data is not only complex but also comes from a new, unfamiliar distribution? For example, a radiologist used to one type of imaging data may misinterpret scans from a different machine or mislabel rare diseases outside their usual clinical practice. We simulate such a scenario by creating a distribution shift between the data used to train the weak supervisor and the data it must label for fine-tuning the strong model. We find that in this setting, naive W2S generalization often fails, with the strong model sometimes performing even worse than its weak supervisor.

This can be seen in our motivating experiment on ImageNet-C, where the distribution shift comes from various types of image corruption. Below, No-shift corresponds to the standard in-distribution scenario, and the corruption types correspond to out-of-distribution scenarios. Performance is measured by the Performance Gap Recovered (PGR): a PGR of 0 means the strong model stays at the performance of its weak supervisor, and a PGR of 100 means it reaches the performance of a strong model trained on ground-truth labels.
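The PGR scale described above can be written as a small helper. This is a minimal sketch that assumes the usual definition, namely the share of the gap between the weak supervisor and the ground-truth-trained strong model that the weakly supervised strong model closes, scaled to 0 to 100. The function and argument names are illustrative and are not taken from the RAVEN code.

```python
def performance_gap_recovered(weak_score: float,
                              w2s_score: float,
                              ceiling_score: float) -> float:
    """Return PGR on a 0-100 scale (illustrative helper, names are assumptions).

    weak_score    -- performance of the weak supervisor
    w2s_score     -- performance of the strong model trained on weak labels
    ceiling_score -- performance of the strong model trained on ground truth
    """
    gap = ceiling_score - weak_score
    if gap == 0:
        # PGR is undefined when the weak model already matches the ceiling.
        raise ValueError("ceiling_score must differ from weak_score")
    return 100.0 * (w2s_score - weak_score) / gap
```

A negative value corresponds to the failure case mentioned above, where the strong model ends up worse than its weak supervisor.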

Our method: RAVEN (Robust AdaptiVe wEightiNg)

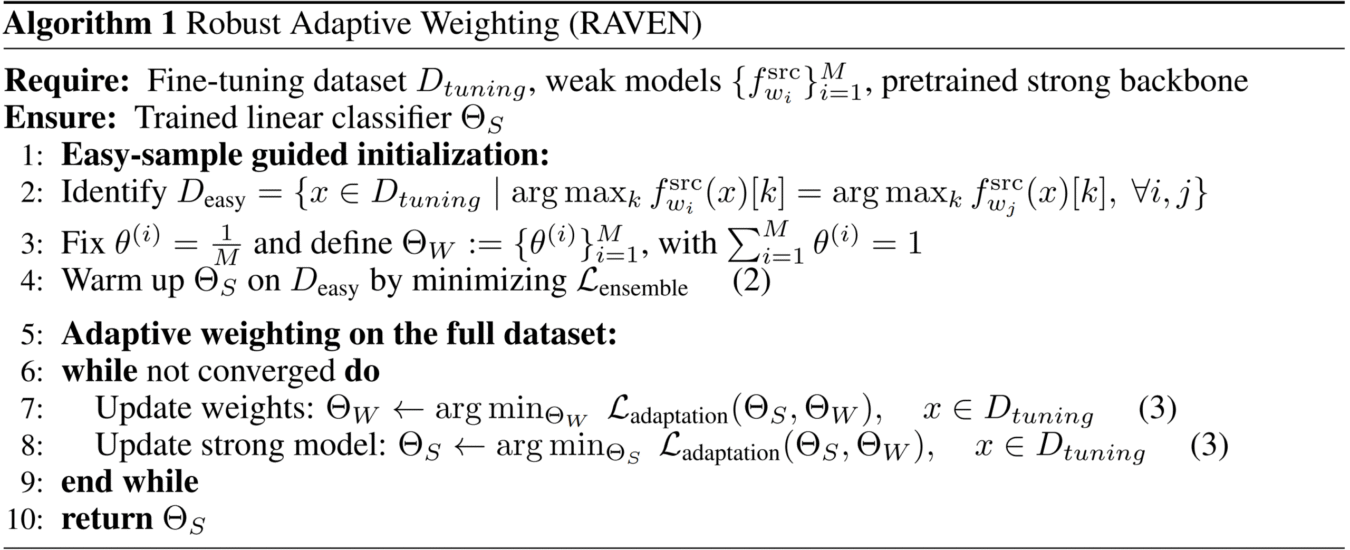

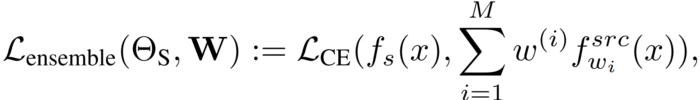

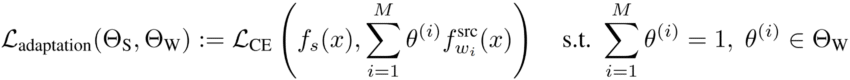

Under a distribution shift, the performance of different weak supervisors can vary dramatically. Some weak models may adapt better to the new out-of-distribution data than others, and we cannot evaluate their individual performances without ground-truth labels, which are assumed to be unavailable. Thus, the key challenge is identifying the more reliable supervisors within the ensemble in an unsupervised manner.

RAVEN addresses this by learning to adaptively weight the outputs of multiple weak models. The supervision weights are treated as trainable parameters and optimized jointly with the strong model’s parameters, so that over the course of training the strong model learns which supervisors to trust for the given data distribution. To give this process a stable start and help the strong model learn to tell reliable supervisors from unreliable ones, RAVEN also uses easy-sample guided initialization: training begins only on samples where all weak models agree and then moves on to the full dataset.
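The two ideas in this paragraph, trainable supervisor weights and a warm-up on samples where all weak models agree, can be sketched in PyTorch as follows. This is an illustrative sketch under stated assumptions, not the released RAVEN implementation: the softmax parameterization of the weights, the cross-entropy against the weighted soft label, the toy data, the number of warm-up steps, and all names (`WeightedWeakSupervision`, `agreement_mask`, `run_demo`) are assumptions made for the example.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class WeightedWeakSupervision(nn.Module):
    """Strong model plus one trainable weight per weak supervisor (hypothetical)."""

    def __init__(self, strong_model: nn.Module, num_supervisors: int):
        super().__init__()
        self.strong_model = strong_model
        # Unnormalized weights; zeros give a uniform ensemble at the start.
        self.weight_logits = nn.Parameter(torch.zeros(num_supervisors))

    def supervisor_weights(self) -> torch.Tensor:
        # Assumption: weights are kept on the simplex via a softmax.
        return F.softmax(self.weight_logits, dim=0)

    def loss(self, x: torch.Tensor, weak_probs: torch.Tensor) -> torch.Tensor:
        # weak_probs has shape (K supervisors, B samples, C classes).
        w = self.supervisor_weights()
        target = torch.einsum("k,kbc->bc", w, weak_probs)  # weighted soft label
        log_q = F.log_softmax(self.strong_model(x), dim=-1)
        return -(target * log_q).sum(dim=-1).mean()


def agreement_mask(weak_probs: torch.Tensor) -> torch.Tensor:
    """True for samples on which every weak supervisor predicts the same class."""
    hard = weak_probs.argmax(dim=-1)          # (K, B) hard predictions
    return (hard == hard[0]).all(dim=0)       # (B,)


def run_demo(steps: int = 20, warmup_steps: int = 5) -> torch.Tensor:
    torch.manual_seed(0)
    K, B, D, C = 3, 32, 8, 4                  # toy sizes, not from the paper
    x = torch.randn(B, D)
    weak_probs = F.softmax(torch.randn(K, B, C), dim=-1)

    model = WeightedWeakSupervision(nn.Linear(D, C), num_supervisors=K)
    # One optimizer updates the strong model and the supervisor weights jointly.
    opt = torch.optim.SGD(model.parameters(), lr=0.1)

    easy = agreement_mask(weak_probs)
    everything = torch.ones(B, dtype=torch.bool)
    for step in range(steps):
        # Warm-up: restrict to agreed-upon samples, if any exist.
        mask = easy if (step < warmup_steps and bool(easy.any())) else everything
        opt.zero_grad()
        loss = model.loss(x[mask], weak_probs[:, mask])
        loss.backward()
        opt.step()
    return model.supervisor_weights().detach()
```

Calling `run_demo()` returns the learned weight vector over the three toy supervisors. Whether the weights are updated during the warm-up phase, and whether the actual objective includes additional terms, is not specified here and is left as an assumption of the sketch.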

Evaluation settings

We evaluate RAVEN on a diverse set of tasks and datasets designed to test generalization under distribution shifts:

- Image classification: iWildCam, Camelyon17, and fMoW benchmarks, where shifts are caused by changes in camera locations, hospitals, or time.

- Text classification: Amazon-WILDS, where the shift comes from different product reviewers, and the medical question-answering datasets MedMCQA and MedQA, with shifts between different medical exam systems.

- Preference alignment: We align a language model using weak preference feedback, introducing a shift between “helpfulness” and “harmlessness” domains from the HH-RLHF dataset.

Results on image and text classification

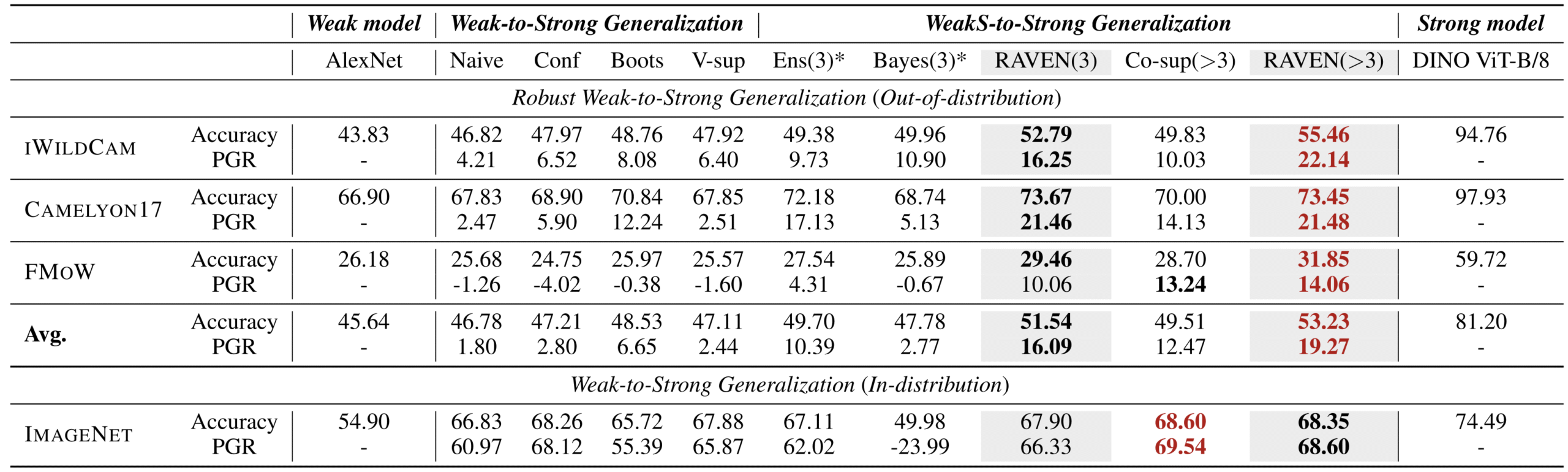

Across all image and text classification tasks, RAVEN consistently outperforms all baselines in the OOD setting. On image classification, RAVEN achieves a 54% average improvement in PGR over the next best method. In the table below, Weak-to-Strong Generalization refers to using a single weak model, whereas WeakS-to-Strong Generalization denotes ensemble-based methods.

Similarly, in text classification, RAVEN shows a 31% average PGR improvement. These results demonstrate that dynamically learning to combine weak supervisors is critical for robustness to distribution shifts. Even on in-distribution tasks, RAVEN remains competitive, often outperforming all other methods.

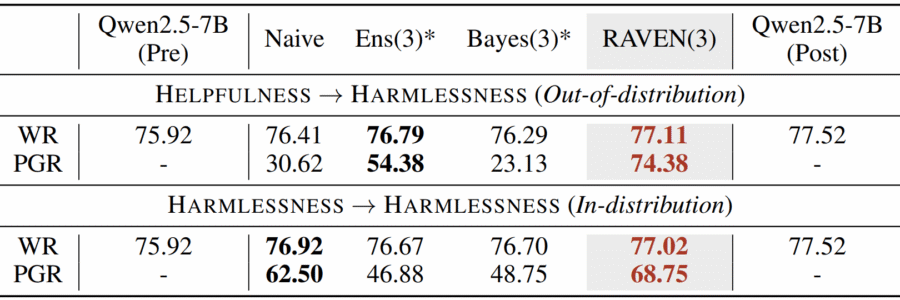

Results on preference alignment

Aligning (strong) large language models with (weak) human preferences is a critical application of W2S generalization. We tested RAVEN’s ability to align a strong model using preference labels from weaker models, where the strong model’s fine-tuning data came from a different domain (Harmlessness) than the weak models’ training data (Helpfulness). RAVEN again achieves the best performance, outperforming the strongest baseline by 40% in PGR and achieving the highest GPT-4o win rate (WR). This highlights RAVEN’s applicability beyond standard classification tasks to the crucial challenge of AI alignment. In the table below, Pre and Post indicate the states before and after alignment with ground-truth labels, respectively.

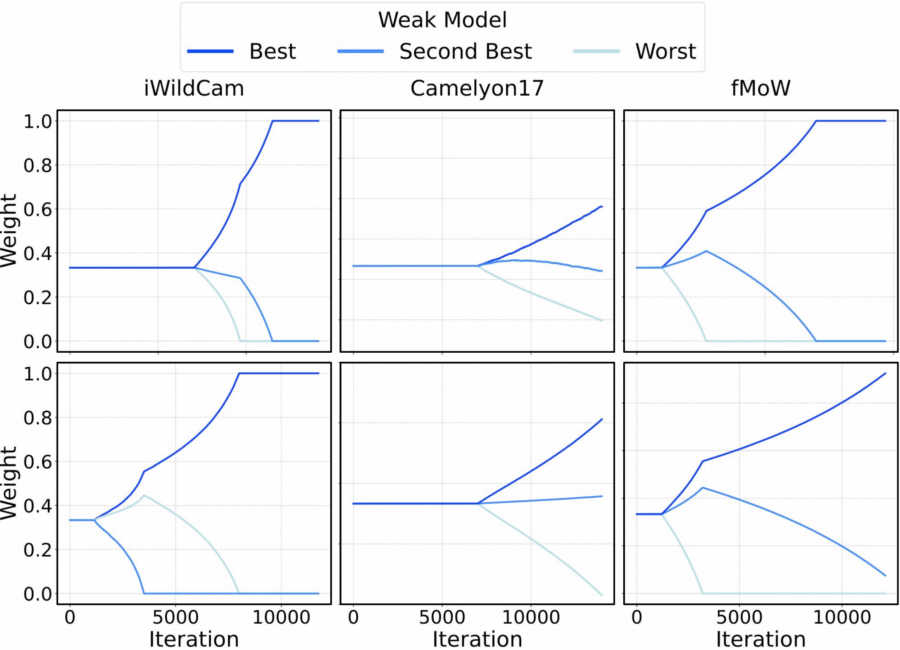

Analysis: RAVEN automatically identifies trustworthy supervision

A remarkable finding is that in most cases, the adaptive weighting in RAVEN ends up identifying the best weak supervisor, as measured in a post-hoc analysis with ground-truth labels. More specifically, we found a strong correlation between the actual OOD performance of a weak model and the ensembling weight that RAVEN assigned to it during strong model training. The plot below shows that across different runs, RAVEN consistently assigns the highest weight to the best-performing weak model. This demonstrates that the strong model can effectively infer the quality of its supervisors and prioritize the most reliable sources of labels.
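A post-hoc check of this kind can be sketched in a few lines. The helpers below are illustrative only: they assume one learned weight and one ground-truth OOD score per weak supervisor, use a plain Pearson correlation (the analysis above does not state which correlation measure was used), and the numbers in the usage lines are made-up placeholders, not results from the paper.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if std_x == 0 or std_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (std_x * std_y)


def top_weight_is_best(weights: Sequence[float],
                       ood_scores: Sequence[float]) -> bool:
    """True if the highest-weighted supervisor is also the best-performing one."""
    by_weight = max(range(len(weights)), key=lambda i: weights[i])
    by_score = max(range(len(ood_scores)), key=lambda i: ood_scores[i])
    return by_weight == by_score


# Placeholder values for illustration only (not from the paper).
example_weights = [0.2, 0.5, 0.3]
example_ood_scores = [0.4, 0.7, 0.5]
print(top_weight_is_best(example_weights, example_ood_scores))
print(pearson(example_weights, example_ood_scores))
```

Note that this check needs ground-truth labels to compute the OOD scores, so it can only be run as an after-the-fact analysis, not during weak-to-strong training.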

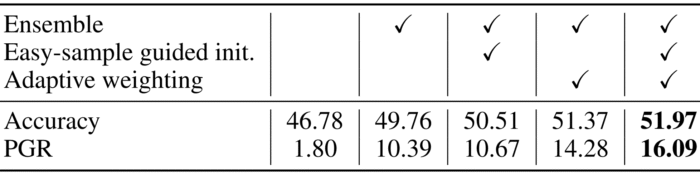

Analysis: Ablation study and scaling with more supervisors

To verify the importance of each component of our framework, we conducted an ablation study. The results confirm that using an ensemble of supervisors, our easy-sample guided initialization, and the adaptive weighting mechanism all contribute significantly to RAVEN’s final performance.

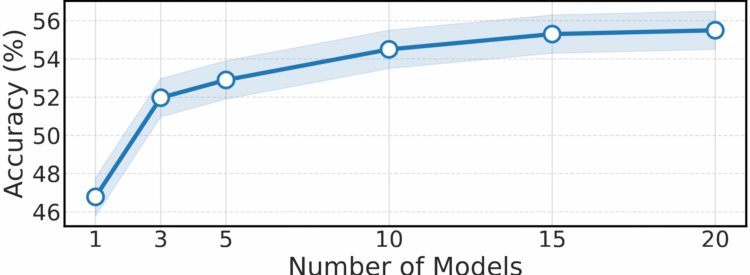

Furthermore, we analyzed how RAVEN’s performance scales with the number of weak supervisors. As shown in the figure below, performance steadily improves as more weak models are added to the ensemble, although the gains begin to saturate. This suggests that leveraging a diverse group of weak supervisors is a robust strategy for improving strong model performance.

Code

A PyTorch implementation of RAVEN is available on GitHub.

Contributors

The following people contributed to this work: