Annotation of Spatially Resolved Single-cell Data with STELLAR

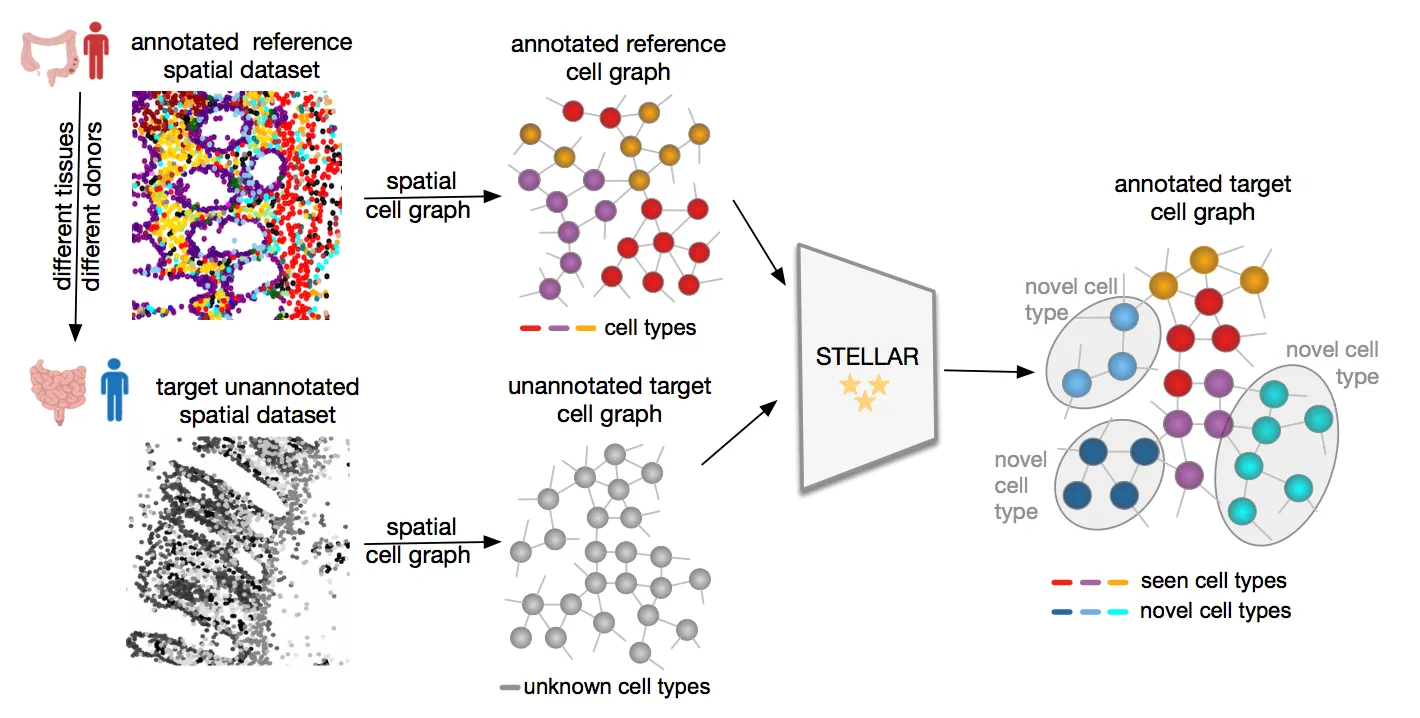

STELLAR is a geometric deep learning method for cell type discovery and identification in spatially resolved single-cell datasets. STELLAR automatically assigns cells to cell types present in an annotated reference dataset and discovers novel cell types, transferring annotations across different dissection regions, tissues, and donors.

The development of spatial protein and RNA imaging technologies has opened new opportunities for understanding location-dependent properties of cells and molecules. The ability to capture the spatial organization of cells within tissue is essential for understanding cellular function and for studying complex intercellular mechanisms. To increase our knowledge of cells in healthy and diseased tissues, we need computational methods that can robustly characterize cells in comprehensive spatial datasets and guide our understanding of functional spatial biology.

We develop STELLAR (SpaTial cELl LeARning), a geometric deep learning tool for cell-type discovery and identification in spatially resolved single-cell datasets. Given an annotated spatially resolved single-cell dataset in which cells are labeled with their cell types (the reference dataset), STELLAR learns the spatial and molecular signatures that define each cell type. STELLAR then uses the reference dataset to transfer annotations to a completely unannotated spatially resolved single-cell dataset with unknown cell types. The reference and unannotated datasets can come from different dissection regions, different donors, or different tissue types.

Publication

Annotation of Spatially Resolved Single-cell Data with STELLAR.

Maria Brbić*, Kaidi Cao*, John W. Hickey*, Yuqi Tan, Michael P. Snyder, Garry P. Nolan, Jure Leskovec.

Nature Methods, 2022.

@article{stellar2022,
  title={Annotation of Spatially Resolved Single-cell Data with STELLAR},
  author={Brbi{\'c}, Maria and Cao, Kaidi and Hickey, John W and Tan, Yuqi and Snyder, Michael and Nolan, Garry P and Leskovec, Jure},
  journal={Nature Methods},
  year={2022},
}

Overview of STELLAR

STELLAR takes as input (i) an annotated reference dataset of spatially resolved single cells, with each cell assigned to a cell type, and (ii) a completely unannotated spatially resolved single-cell dataset whose cell types are unknown. STELLAR then assigns unannotated cells to cell types from the reference dataset; for cells that do not fit any reference cell type, it identifies individual novel cell types and assigns the cells to them.
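The assignment step described above can be illustrated with a simplified stand-in. This sketch is not STELLAR's method: instead of STELLAR's learned model, it uses nearest-prototype assignment with a similarity threshold, and it assumes each cell is already described by a numeric feature vector. A reference cell type's prototype is the mean vector of its annotated cells; an unannotated cell takes the label of its most cosine-similar prototype, or is flagged as `"novel"` when no prototype reaches a hypothetical `min_similarity` cutoff. The function names and toy data are illustrative only.

```python
import math
from collections import defaultdict


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 for zero vectors)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def build_prototypes(ref_features, ref_labels):
    """Average the feature vectors of each annotated reference cell type."""
    sums = {}
    counts = defaultdict(int)
    for x, label in zip(ref_features, ref_labels):
        if label not in sums:
            sums[label] = [0.0] * len(x)
        sums[label] = [s + xi for s, xi in zip(sums[label], x)]
        counts[label] += 1
    return {label: [s / counts[label] for s in sums[label]] for label in sums}


def assign_cells(target_features, prototypes, min_similarity=0.8):
    """Assign each unannotated cell to its most similar reference type,
    or to "novel" when no reference type is similar enough.
    (Hypothetical threshold rule, not STELLAR's training objective.)"""
    assignments = []
    for x in target_features:
        best_label, best_sim = max(
            ((label, cosine(x, p)) for label, p in prototypes.items()),
            key=lambda pair: pair[1],
        )
        assignments.append(best_label if best_sim >= min_similarity else "novel")
    return assignments


if __name__ == "__main__":
    # Toy reference: two annotated cell types described by three markers.
    ref_features = [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.1, 0.9, 0.0]]
    ref_labels = ["T cell", "T cell", "B cell", "B cell"]
    prototypes = build_prototypes(ref_features, ref_labels)
    # The second target cell expresses only a marker unseen in the reference.
    print(assign_cells([[0.95, 0.05, 0.0], [0.0, 0.0, 1.0]], prototypes))
    # ['T cell', 'novel']
```

Unlike STELLAR, which splits unmatched cells into individual novel cell types, this sketch lumps them under a single `"novel"` label; separating them would require an additional step, such as clustering the flagged cells.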

STELLAR learns low-dimensional cell representations (embeddings) that reflect the molecular features of cells as well as their spatial organization and neighborhood. The cell embeddings are learned using graph convolutional neural networks. Given these embeddings, STELLAR has two capabilities: (1) it assigns cells in the unannotated dataset to one of the cell types seen in the reference dataset; and (2) for cells that do not fit the known, labeled cell types, it identifies novel cell types and assigns cells to them.
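The idea of mixing a cell's molecular profile with that of its spatial neighbors can be sketched in plain Python. This is a minimal illustration, not STELLAR's implementation (which is written in PyTorch and learns its weights): it assumes a k-nearest-neighbor graph over 2D cell centroids and a single graph-convolution-style layer that averages each cell's features with its neighbors' (self-loop included), projects the result with a hand-set weight matrix, and applies ReLU. `knn_graph` and `gcn_layer` are hypothetical names, and STELLAR may construct its cell graph differently.

```python
import math


def knn_graph(coords, k):
    """Connect each cell to its k nearest neighbors by Euclidean distance
    (assumed graph construction, for illustration only)."""
    neighbors = []
    for i, (xi, yi) in enumerate(coords):
        dists = sorted(
            (math.hypot(xi - xj, yi - yj), j)
            for j, (xj, yj) in enumerate(coords)
            if j != i
        )
        neighbors.append([j for _, j in dists[:k]])
    return neighbors


def gcn_layer(features, neighbors, weight):
    """One GCN-style layer with mean aggregation:
    h_i' = ReLU(W^T * mean(h_j for j in {i} plus N(i))).
    `weight` has shape (in_dim, out_dim) and is fixed here, not learned."""
    out = []
    for i in range(len(features)):
        group = [features[i]] + [features[j] for j in neighbors[i]]
        mean = [sum(col) / len(group) for col in zip(*group)]
        # Project the aggregated vector from in_dim to out_dim.
        projected = [
            sum(m * weight[d][o] for d, m in enumerate(mean))
            for o in range(len(weight[0]))
        ]
        out.append([max(0.0, v) for v in projected])  # ReLU
    return out


if __name__ == "__main__":
    # Two spatially separated pairs of cells, two markers per cell.
    coords = [(0.0, 0.0), (1.0, 0.0), (10.0, 10.0), (11.0, 10.0)]
    features = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [2.0, 2.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(gcn_layer(features, knn_graph(coords, k=1), identity))
    # [[0.5, 0.5], [0.5, 0.5], [2.0, 1.0], [2.0, 1.0]]
```

After one layer, each cell's representation reflects both its own markers and those of its spatial neighbors; stacking layers widens that neighborhood, and in a trained model the weight matrices would be learned rather than set by hand.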

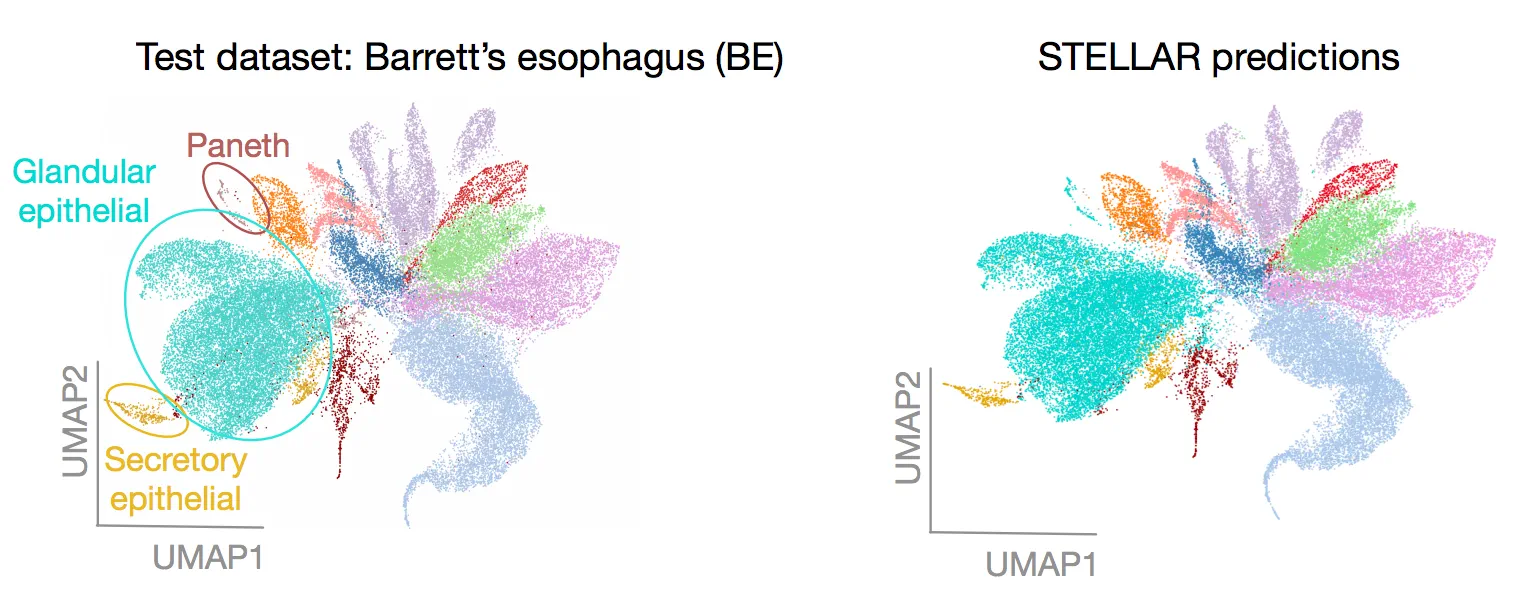

Case study: Barrett’s esophagus CODEX dataset

We applied STELLAR to CODEX multiplexed imaging data, using data from human tonsil as the reference set and tissue from a patient with Barrett’s esophagus (BE) as the unannotated target tissue. Three subtypes of epithelial cells appear only in the BE dataset, while B cells appear only in the tonsil data.

STELLAR accurately assigned cell types to 93% of cells in the BE data. It also identified the BE-specific subtypes of epithelial cells as novel cell types, correctly differentiating between the glandular epithelial and secretory epithelial novel cell types (figure below). Overall, STELLAR's predictions agree with the ground-truth annotations and show no areas of the tissue where cell types are systematically misrecognized.

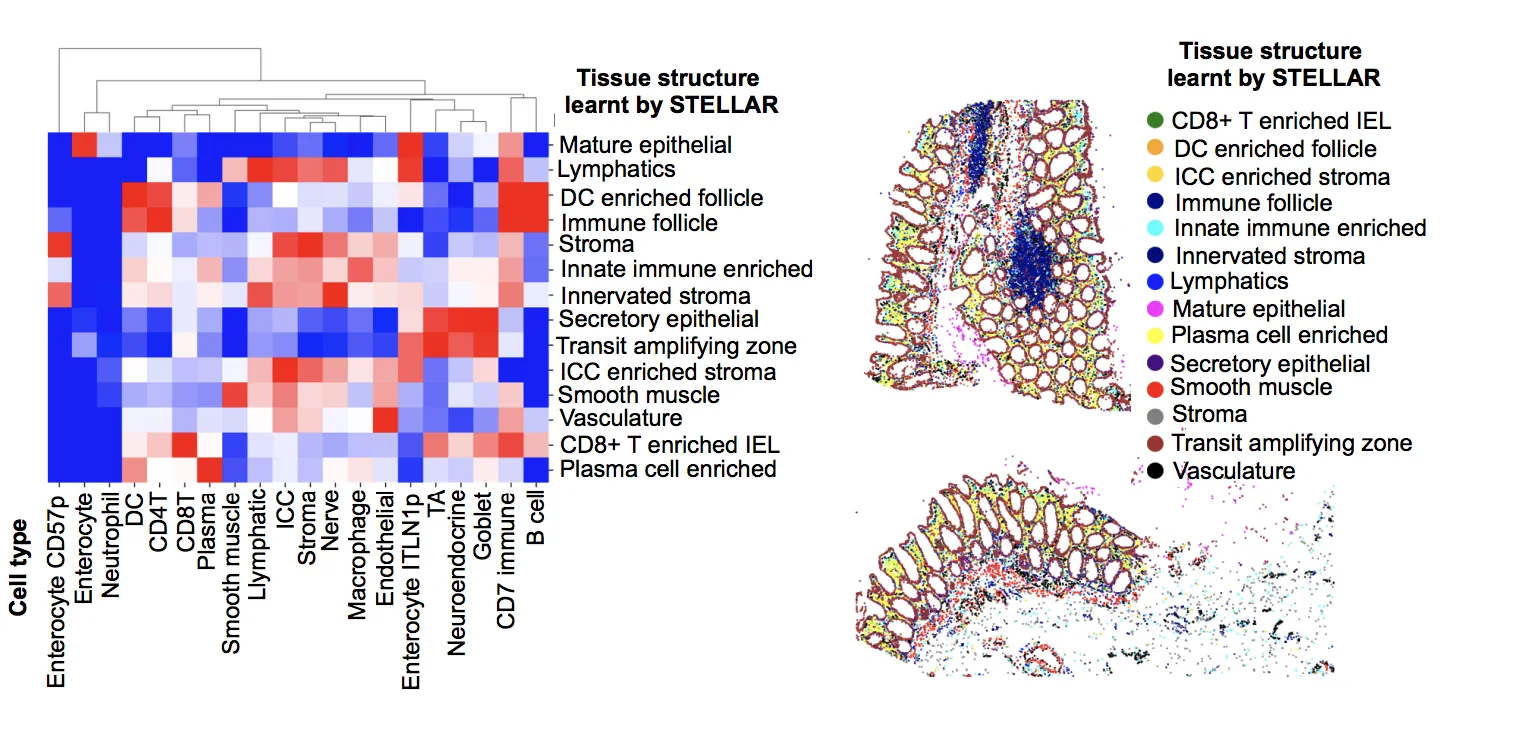

STELLAR annotates HuBMAP datasets

As part of the HuBMAP consortium, we generated CODEX imaging data for tissues from a healthy intestine. We used our expert-annotated samples from a single donor as training data and applied STELLAR to unannotated samples from other donors. In total, we applied STELLAR to 8 donors, 64 tissues, and 2.6 million cells. STELLAR substantially reduced the expert annotation effort: annotating these images manually would require approximately 320 hours, whereas with STELLAR the expert needed only 4 hours. We confirmed the quality of STELLAR’s predictions by examining the average marker expression profiles of predicted cell types in samples from a different donor.

Datasets

We have made the CODEX multiplexed imaging datasets used in STELLAR available on Dryad.

The MERFISH data are from Zhang et al., Nature 2021.

Code

A PyTorch implementation of STELLAR is available on GitHub.

Contributors

The following people contributed to this work:

Maria Brbić*

Kaidi Cao*

John Hickey*

Yuqi Tan

Michael Snyder

Garry Nolan

Jure Leskovec