Let Go of Your Labels with Unsupervised Transfer

TURTLE is a fully unsupervised approach for inferring the underlying human labeling of a downstream dataset given representation spaces of foundation models. TURTLE is compatible with any pre-trained representations and does not require any task-specific representation learning.

Foundation vision-language models have enabled remarkable transferability of pre-trained representations to a wide range of downstream tasks. However, to solve a new task, current approaches to downstream transfer still require human guidance, either to provide labeled examples or to define the visual categories that appear in the data. Here, we present TURTLE, a method that enables fully unsupervised transfer from foundation models. The key idea behind our approach is to search for the labeling of a downstream dataset that maximizes the margins of linear classifiers in the representation spaces of one or more foundation models, thereby uncovering the underlying human labeling. Compared to zero-shot and supervised transfer, unsupervised transfer with TURTLE does not need supervision in any form. Compared to deep clustering methods, TURTLE does not require task-specific representation learning, which is expensive for modern foundation models.

Publication

Let Go of Your Labels with Unsupervised Transfer

Artyom Gadetsky*, Yulun Jiang*, Maria Brbić.

International Conference on Machine Learning (ICML), 2024.

@inproceedings{gadetsky2024let,
  title={Let Go of Your Labels with Unsupervised Transfer},
  author={Gadetsky, Artyom and Jiang, Yulun and Brbi{\'c}, Maria},
  booktitle={International Conference on Machine Learning},
  year={2024},
}

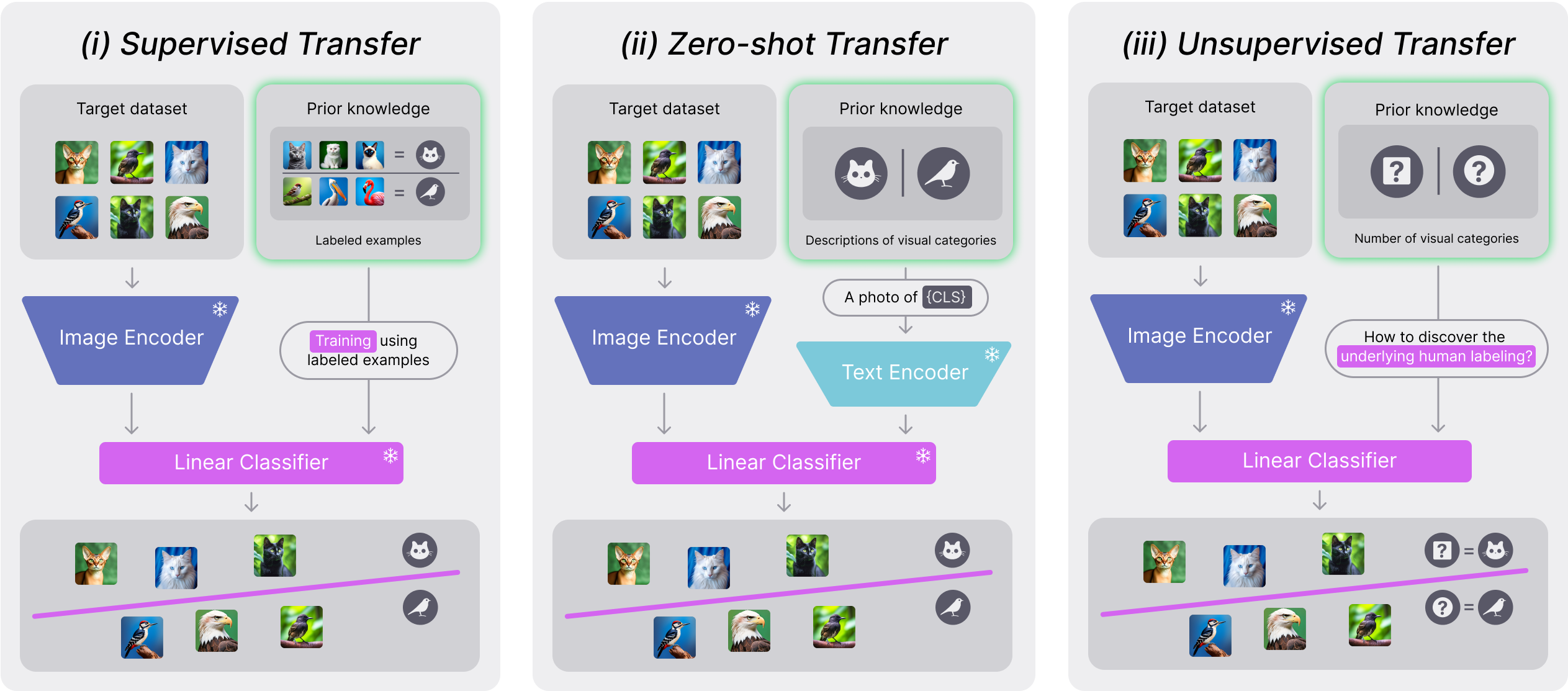

Overview of types of downstream transfer

The question we aim to answer is how to use representations from foundation models to solve a new task in a fully unsupervised manner. We introduce the problem setting of unsupervised transfer and highlight how it differs from other types of downstream transfer, which vary in the amount of available supervision. Given representation spaces of foundation models, (i) supervised transfer, represented by a linear probe, trains a linear classifier on labeled examples of a downstream dataset; (ii) zero-shot transfer assumes that descriptions of the visual categories in a downstream dataset are given and uses them through a text encoder to solve the task; and (iii) unsupervised transfer assumes the least supervision, i.e., only the number of categories is given, and aims to uncover the underlying human labeling of the dataset.

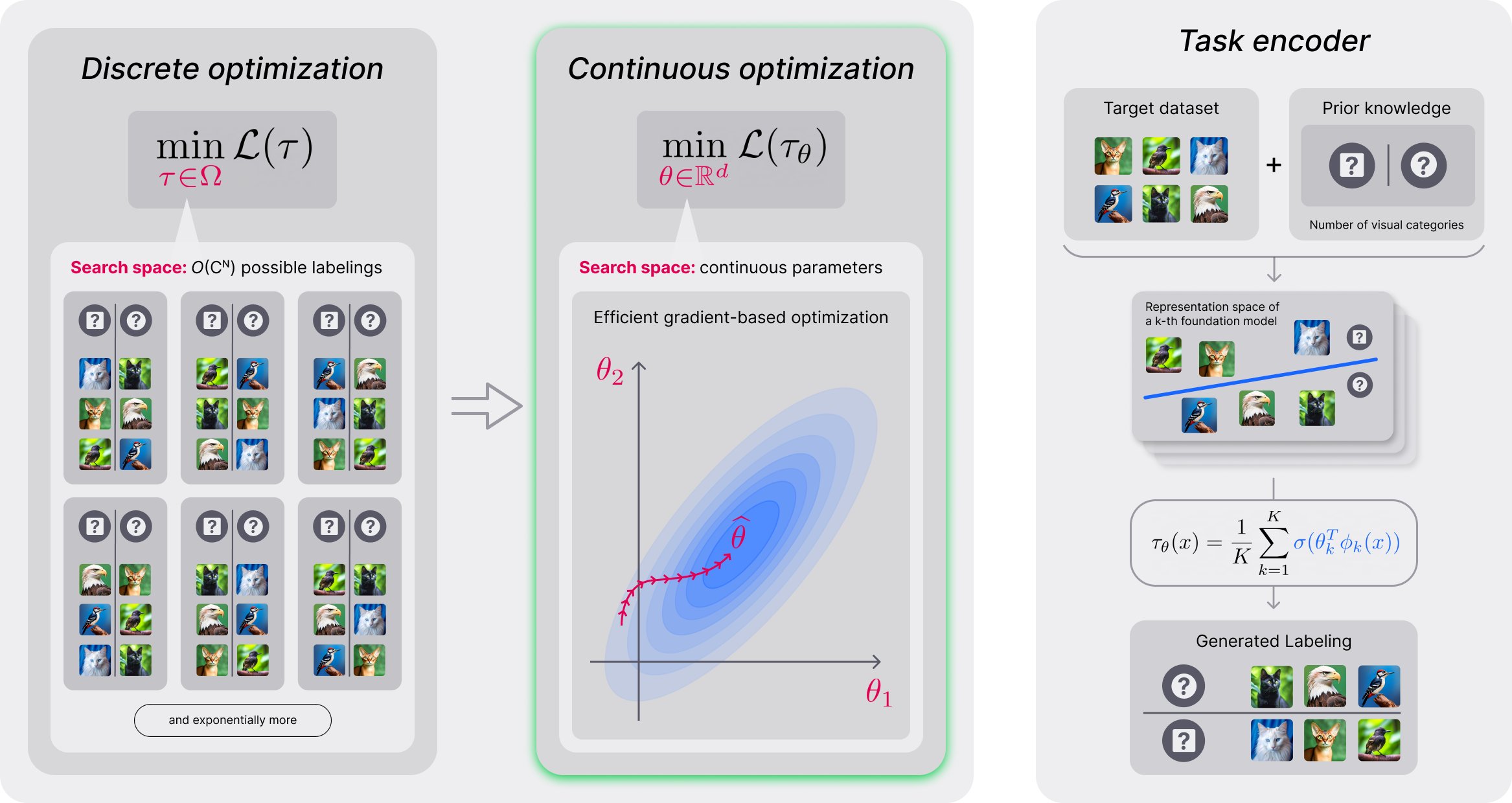

Overview of TURTLE

Task encoder to parametrize a labeling

The problem of unsupervised transfer can be viewed as a search for the underlying human labeling among all possible labelings of a dataset. However, enumerating labelings directly results in a hard discrete optimization problem. To overcome this, we resort to a continuous relaxation and parametrize a labeling with a task encoder, which enables efficient gradient-based optimization. In TURTLE, the task encoder is built on pre-trained representations of foundation models. These representations remain fixed throughout training, which removes the need for task-specific representation learning. Specifically, given K representation spaces, our task encoder is a simple ensemble of linear classifiers, i.e., the average of the outputs of one linear classifier per representation space.
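A minimal sketch of such a task encoder for a single sample follows, written in plain Python so it has no dependencies. The names `task_encoder` and `softmax` are hypothetical, and we assume the ensemble averages the per-space softmax probabilities; averaging logits before a single softmax would be an equally plausible reading of "average of outputs", so the paper is the reference for the exact form. Only the per-space weight matrices would be trained; the features stay frozen.

```python
import math


def softmax(z):
    # Numerically stable softmax over a list of logits.
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def task_encoder(features_per_space, weights_per_space):
    """Hypothetical TURTLE-style task encoder for one sample.

    features_per_space: K frozen feature vectors, one per foundation model.
    weights_per_space:  K trainable weight matrices, each with C rows of length d_k.
    Returns a length-C probability vector: the mean of the K per-space
    softmax outputs (averaging probabilities is our assumption).
    """
    K = len(features_per_space)
    C = len(weights_per_space[0])
    avg = [0.0] * C
    for x, W in zip(features_per_space, weights_per_space):
        logits = [sum(w * xi for w, xi in zip(row, x)) for row in W]
        for c, p in enumerate(softmax(logits)):
            avg[c] += p / K
    return avg


# Usage: two spaces of different dimensions (3 and 2) and C = 2 clusters.
x1, x2 = [0.5, -1.0, 2.0], [1.0, 0.3]
W1 = [[0.1, 0.0, 0.2], [-0.1, 0.3, 0.0]]
W2 = [[0.4, -0.2], [0.0, 0.5]]
probs = task_encoder([x1, x2], [W1, W2])
```

Because every space contributes a full probability vector, spaces with different dimensionalities can be combined without projecting them to a shared size.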

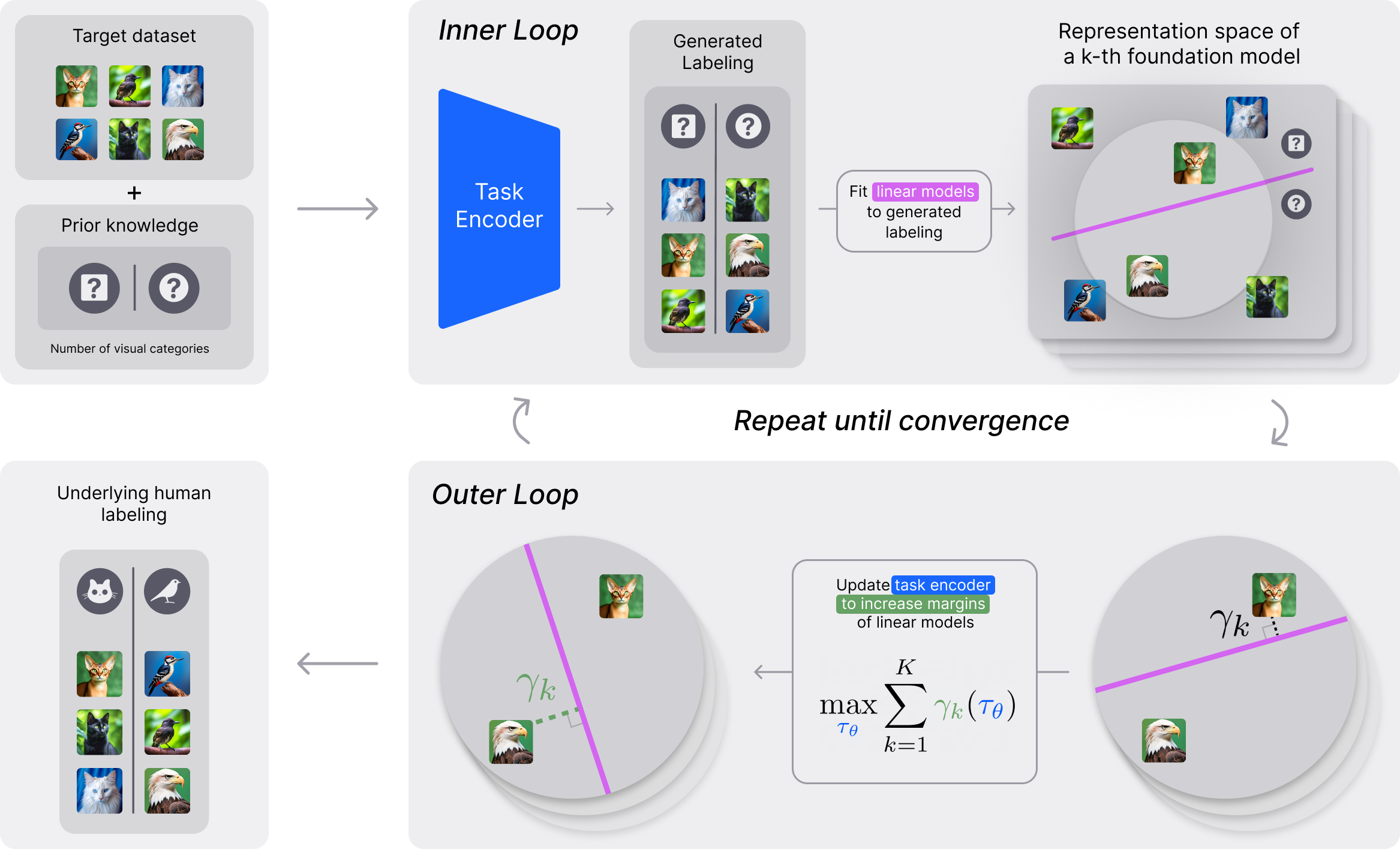

Training TURTLE

TURTLE searches for the underlying human labeling with an efficient bilevel optimization procedure. Given K representation spaces of foundation models, (i) in the inner loop, the task encoder generates a labeling of the dataset, and a linear model is trained to fit this labeling in each representation space; (ii) in the outer loop, the task encoder is updated to maximize the margins of the trained linear models, producing a new labeling that induces linear models with larger margins than the current ones. This alternating procedure is repeated until convergence, driving the search toward the labeling whose linear models have the largest margins over all possible labelings. We have recently shown that such large-margin labelings are strikingly well correlated with the underlying human labelings (HUME, NeurIPS 2023). Consequently, TURTLE uses the maximum margin principle to guide the search for the underlying human labeling of a dataset.
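The loop below is a simplified, single-space (K = 1) sketch of this bilevel procedure, not the authors' implementation. It rests on two assumptions of ours: the outer objective uses the cross-entropy loss of the inner linear model on the current labeling as a stand-in for its margin, and an entropy penalty on the average predicted label (weighted by the hypothetical `gamma`) discourages the trivial labeling that puts every sample into one cluster. The outer update holds the inner weights `W` fixed, which is one way to realize a first-order hypergradient approximation. All function and parameter names are hypothetical.

```python
import math
import random


def softmax(z):
    # Numerically stable softmax over a list of logits.
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def matvec(W, x):
    # Logits of a linear model: one dot product per row (class) of W.
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def turtle_sketch(feats, C, outer_steps=50, inner_steps=10,
                  lr_inner=0.5, lr_outer=0.5, gamma=1.0, seed=0):
    """Hypothetical single-space (K = 1) sketch of the bilevel search.

    feats: N frozen feature vectors from one representation space.
    Returns the soft labeling (N probability vectors) of the final task encoder.
    """
    rng = random.Random(seed)
    N, d = len(feats), len(feats[0])
    theta = [[rng.gauss(0.0, 0.1) for _ in range(d)] for _ in range(C)]  # task encoder
    W = [[0.0] * d for _ in range(C)]  # inner linear model, warm-started across outer steps

    for _ in range(outer_steps):
        labels = [softmax(matvec(theta, x)) for x in feats]  # current soft labeling

        # Inner loop: gradient descent on soft-label cross-entropy to fit W to the labeling.
        for _ in range(inner_steps):
            grad_W = [[0.0] * d for _ in range(C)]
            for x, y in zip(feats, labels):
                q = softmax(matvec(W, x))
                for c in range(C):
                    g = (q[c] - y[c]) / N
                    for j in range(d):
                        grad_W[c][j] += g * x[j]
            W = [[w - lr_inner * g for w, g in zip(rw, rg)] for rw, rg in zip(W, grad_W)]

        # Outer step (first-order: W is held fixed, no backprop through the inner loop).
        # Objective: mean cross-entropy of W on the labeling - gamma * entropy(mean label).
        mean_y = [sum(y[c] for y in labels) / N for c in range(C)]
        grad_theta = [[0.0] * d for _ in range(C)]
        for x, y in zip(feats, labels):
            q = softmax(matvec(W, x))
            # dObjective/dy_c; the constant "+1" of the entropy gradient cancels below.
            a = [(-math.log(q[c] + 1e-12) + gamma * math.log(mean_y[c] + 1e-12)) / N
                 for c in range(C)]
            a_mean = sum(yc * ac for yc, ac in zip(y, a))
            for c in range(C):
                dz = y[c] * (a[c] - a_mean)  # chain rule through the softmax
                for j in range(d):
                    grad_theta[c][j] += dz * x[j]
        theta = [[t - lr_outer * g for t, g in zip(rt, rg)]
                 for rt, rg in zip(theta, grad_theta)]

    return [softmax(matvec(theta, x)) for x in feats]


# Toy usage: two groups of points; the constant first feature acts as a bias term.
feats = [[1.0, 2.0], [1.0, 2.2], [1.0, -2.0], [1.0, -2.1]]
labeling = turtle_sketch(feats, C=2)
hard_labels = [max(range(2), key=p.__getitem__) for p in labeling]
```

Warm-starting `W` across outer steps keeps each inner loop short. With K spaces, one would keep a separate task-encoder head and inner linear model per space.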

Evaluation setting and the baselines

We evaluate TURTLE on an extensive benchmark of 26 vision datasets and compare it with the baselines in terms of accuracy. We compare unsupervised transfer using TURTLE to baselines that differ in the amount of supervision they use. First, we compare TURTLE to HUME, a method that has recently shown state-of-the-art unsupervised learning performance and surpassed traditional deep clustering approaches. Next, to explore how far we can go with unsupervised transfer, we compare TURTLE, in a challenging setting, with zero-shot transfer, unsupervised prompt tuning and supervised baselines. All of these baselines use some form of supervision, whereas TURTLE is fully unsupervised.
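Because cluster indices produced without labels are arbitrary, accuracy in unsupervised settings is usually computed after matching clusters to classes with the best one-to-one assignment. We assume that convention here, with as many clusters as classes. The hypothetical helper below brute-forces all permutations, which is only practical for a handful of classes; for benchmarks with many classes, the same matching can be computed with the Hungarian algorithm, for example `scipy.optimize.linear_sum_assignment`.

```python
from itertools import permutations


def clustering_accuracy(y_true, y_pred, num_classes):
    """Accuracy after the best one-to-one relabeling of predicted clusters.

    Assumes cluster ids and class ids both range over 0..num_classes-1.
    Brute force over num_classes! permutations: fine for a few classes only.
    """
    best_hits = 0
    for perm in permutations(range(num_classes)):
        # perm[k] is the class id assigned to predicted cluster k.
        hits = sum(perm[p] == t for t, p in zip(y_true, y_pred))
        best_hits = max(best_hits, hits)
    return best_hits / len(y_true)


print(clustering_accuracy([0, 0, 1, 1], [1, 1, 0, 0], 2))  # → 1.0
```

A perfect clustering with swapped cluster ids still scores 1.0, which is exactly the invariance an unsupervised method needs.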

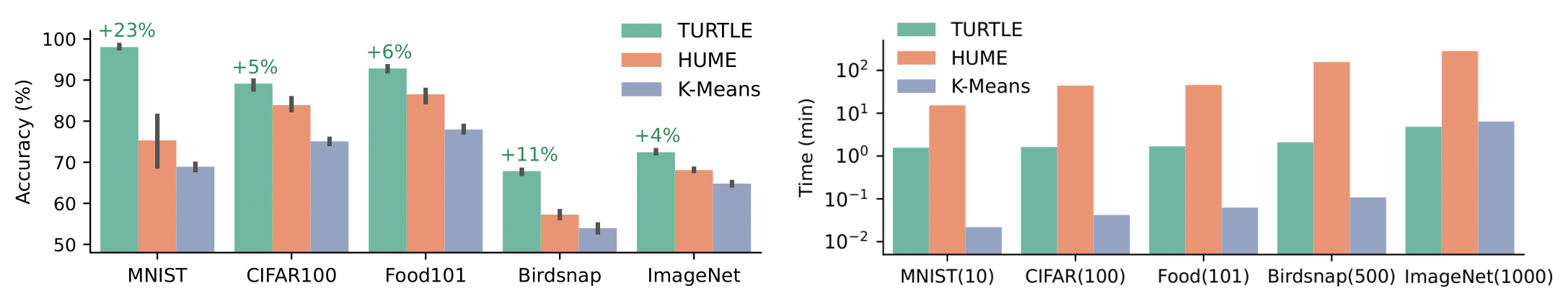

TURTLE achieves state-of-the-art unsupervised performance

We start by comparing TURTLE to an improved version of HUME (see details in our paper). Both TURTLE and HUME use the same representation spaces of the CLIP ViT-L/14 and DINOv2 foundation models. We also compare TURTLE to K-Means clustering on the concatenated embeddings from CLIP ViT-L/14 and DINOv2. K-Means serves as a simple unsupervised transfer baseline since, like TURTLE, it does not require task-specific representation learning. As shown in the figure below, TURTLE substantially outperforms HUME on all considered datasets, confirming that maximizing margins in the spaces of multiple foundation models is an effective design choice for searching for the underlying human labeling. Remarkably, TURTLE yields 23% and 11% absolute improvements (30% and 18% relative) on the MNIST and Birdsnap datasets, respectively. TURTLE also sets a new state of the art for unsupervised performance on several datasets, including ImageNet, where it achieves 72.9% accuracy and outperforms the previous state of the art by 5.5%.

We also assess the optimization efficiency of TURTLE by comparing the training time of all considered methods. The results support the use of the first-order hypergradient approximation in TURTLE (see details in our paper). Notably, TURTLE achieves a 10x speedup over HUME, training on ImageNet in less than five minutes. Overall, our results show that TURTLE effectively addresses the challenges of unsupervised transfer and outperforms unsupervised baselines by a large margin.

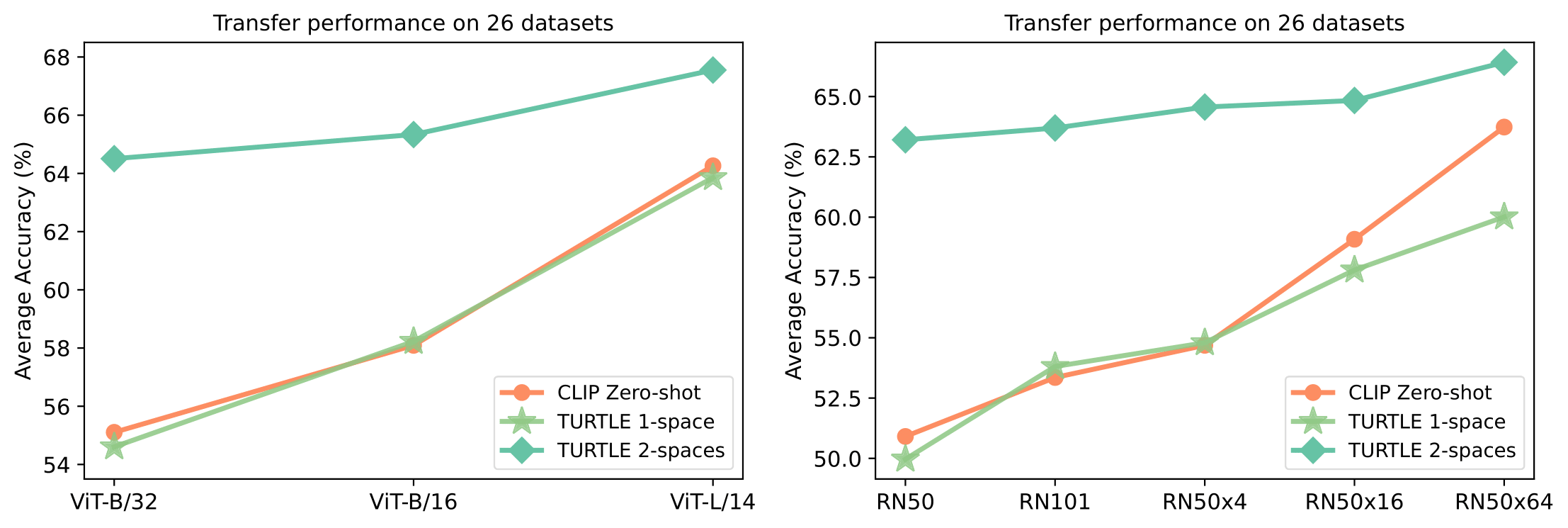
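For reference, the K-Means baseline described above can be sketched as Lloyd's algorithm on per-sample concatenated embeddings. This is a plain-Python illustration with a single random initialization and no per-space normalization, both simplifications of ours; the function name `kmeans_concat` is hypothetical, and a real run would more likely use a library implementation such as scikit-learn's `KMeans` with several restarts.

```python
import random


def kmeans_concat(spaces, C, iters=100, seed=0):
    """Lloyd's K-Means on embeddings concatenated across representation spaces.

    spaces: list of K lists; spaces[k][i] is sample i's embedding in space k.
    Returns a cluster id in 0..C-1 for every sample.
    """
    n = len(spaces[0])
    # Concatenate each sample's K embeddings into one vector.
    X = [[v for space in spaces for v in space[i]] for i in range(n)]
    rng = random.Random(seed)
    centroids = [list(x) for x in rng.sample(X, C)]  # random distinct samples as init
    assign = None
    for _ in range(iters):
        # Assignment step: nearest centroid by squared Euclidean distance.
        new_assign = [
            min(range(C), key=lambda c: sum((a - b) ** 2 for a, b in zip(x, centroids[c])))
            for x in X
        ]
        if new_assign == assign:  # converged: assignments no longer change
            break
        assign = new_assign
        # Update step: move each centroid to the mean of its members.
        for c in range(C):
            members = [x for x, a in zip(X, assign) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return assign


# Two toy 1-D "spaces" for four samples; concatenation gives 2-D points.
space_a = [[0.0], [0.0], [10.0], [10.0]]
space_b = [[0.0], [1.0], [10.0], [11.0]]
clusters = kmeans_concat([space_a, space_b], C=2)
```

Unlike TURTLE, this baseline has no notion of a labeling that linear models in each space separate with a large margin; it only groups nearby points in the joint space.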

Unsupervised transfer with TURTLE outperforms CLIP zero-shot transfer

Next, we compare TURTLE to CLIP zero-shot transfer, which uses descriptions of the ground-truth classes as a form of supervision. Remarkably, without using any supervision, TURTLE 2-spaces outperforms CLIP zero-shot transfer by a large margin in average accuracy over the 26 benchmark datasets, for a range of ViT and ResNet backbones. In particular, TURTLE 2-spaces outperforms CLIP zero-shot by 9%, 7% and 4% absolute (17%, 12% and 5% relative) with the ViT-B/32, ViT-B/16 and ViT-L/14 backbones, respectively. Similarly, it outperforms CLIP zero-shot by 12%, 10%, 10%, 6% and 3% absolute (24%, 19%, 18%, 10% and 4% relative) with the ResNet50, ResNet101, ResNet50x4, ResNet50x16 and ResNet50x64 backbones, respectively. Moreover, even TURTLE 1-space matches the performance of CLIP zero-shot with all studied ViT backbones and with 4 out of 5 ResNet backbones. Note that CLIP zero-shot and TURTLE 1-space are both linear models in the same representation space and differ only in the supervision available to produce their weights.
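To make the last point concrete, here is a sketch of CLIP-style zero-shot classification written as a linear classifier. We assume the image embedding and the class-description embeddings have already been computed by CLIP's image and text encoders; `class_text_embs` stands in for the latter, and the function names are hypothetical. The weight matrix is simply the normalized text embeddings, fixed by the class descriptions, whereas TURTLE 1-space would learn a weight matrix of the same shape without any descriptions. CLIP also scales the cosine similarities by a learned temperature, which we omit because it does not change the argmax.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def zero_shot_predict(image_emb, class_text_embs):
    """CLIP-style zero-shot prediction, written as a linear classifier.

    image_emb:       embedding of one image (assumed precomputed by CLIP's image encoder).
    class_text_embs: one embedding per class description (assumed precomputed by
                     CLIP's text encoder).
    """
    # The weight matrix is fixed by the class descriptions, not learned from data.
    W = [l2_normalize(t) for t in class_text_embs]
    x = l2_normalize(image_emb)
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in W]  # cosine similarities
    return max(range(len(logits)), key=logits.__getitem__)


print(zero_shot_predict([1.0, 0.0], [[1.0, 0.1], [0.0, 1.0]]))  # → 0
```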

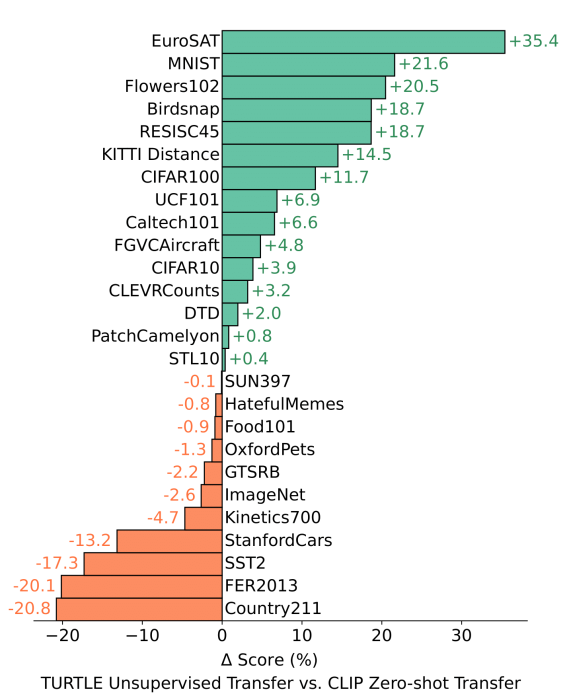

On individual datasets, TURTLE with the CLIP ViT-L/14 and DINOv2 foundation models outperforms CLIP zero-shot transfer on 15 out of 26 datasets, with remarkable absolute gains of 35%, 21% and 20% (58%, 28% and 26% relative) on the EuroSAT, MNIST and Flowers102 datasets, respectively.

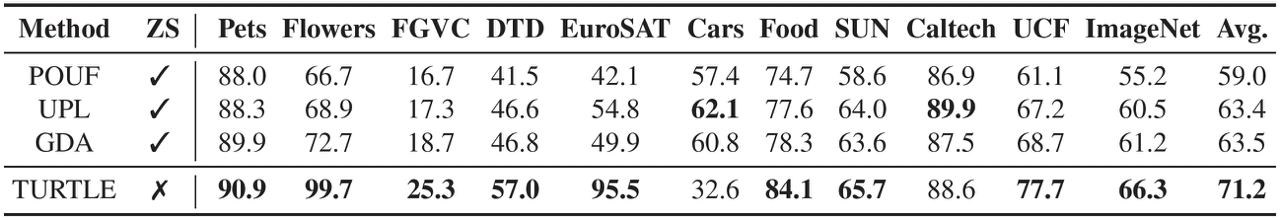

Unsupervised transfer with TURTLE outperforms unsupervised prompt tuning

Next, we compare TURTLE to state-of-the-art unsupervised prompt tuning baselines. These approaches refine the class prototypes defined by the CLIP zero-shot classifier through unsupervised adaptation on the downstream task. Following previous work, we use CLIP ResNet-50 representations for all methods. Although it is fully unsupervised, TURTLE consistently outperforms all considered baselines by a large margin. Specifically, TURTLE achieves an 8% absolute improvement (12% relative) in average accuracy over the best unsupervised prompt tuning baseline. On the Flowers102 and EuroSAT datasets, our framework attains outstanding absolute gains of 27% and 41% (37% and 75% relative), respectively. Overall, these results demonstrate the surprising effectiveness of unsupervised transfer.

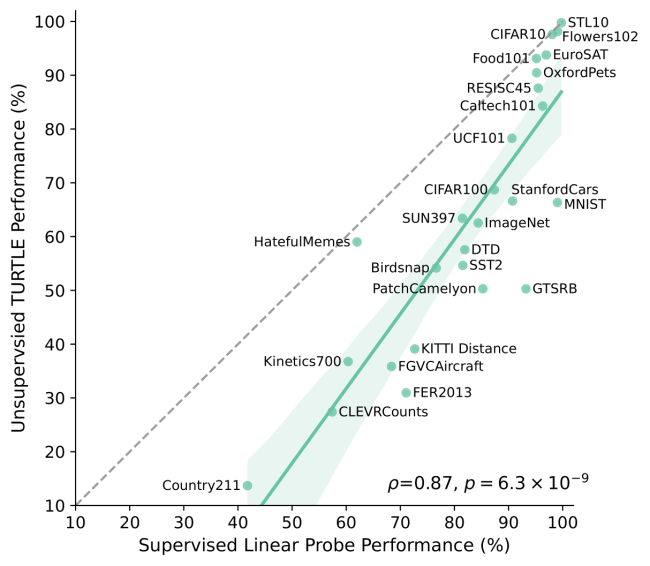

TURTLE can achieve optimal unsupervised transfer performance given high-quality representations

Finally, we compare TURTLE 1-space ViT-L/14 to a supervised linear probe in the same representation space. In this setup, both models are linear in the representation space of CLIP ViT-L/14 and differ only in the amount of supervision used to produce their weights. The supervised linear probe is trained with all available labels, so we treat it as an upper bound on the performance that unsupervised transfer can achieve in this space. We observe a high positive correlation of 0.87 between unsupervised transfer performance and its fully supervised counterpart. This result suggests that TURTLE's performance may improve as supervised linear probe performance improves (which we investigate further in our paper). Notably, TURTLE approaches the optimal transfer performance on the STL10, CIFAR10, Flowers102, Food101 and HatefulMemes datasets, demonstrating that labels may not be needed given sufficiently high-quality representations, as measured by the supervised linear probe.
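We assume the correlation reported above is the Pearson correlation between the per-dataset accuracies of TURTLE and of the linear probe. A dependency-free version is sketched below (the name `pearson` is ours); on Python 3.10 and later, `statistics.correlation` computes the same coefficient.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)  # undefined (division by zero) if either input is constant
```

Here `xs` would hold TURTLE's accuracy on each dataset and `ys` the linear probe's accuracy on the same datasets, in the same order.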